Overview

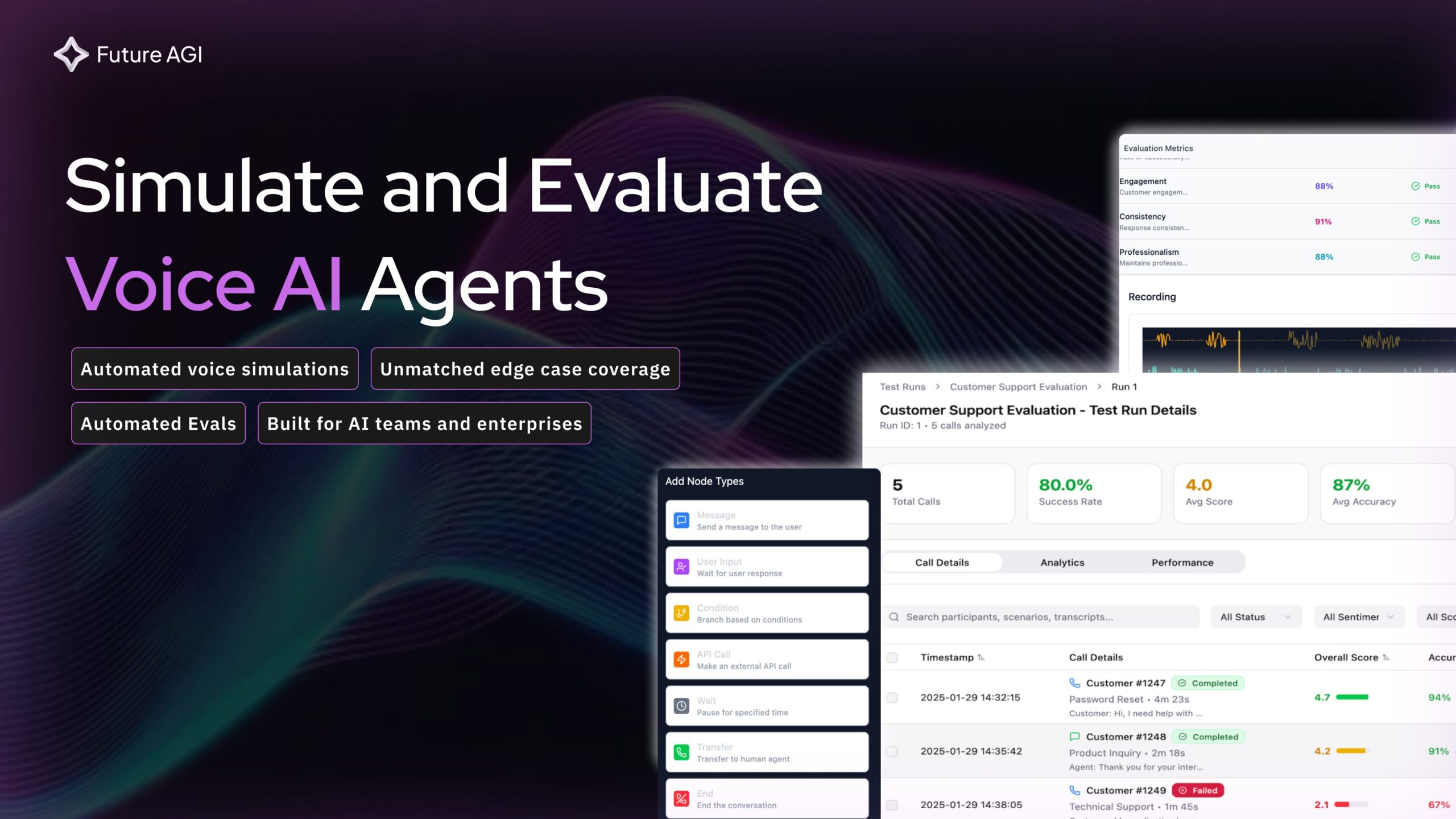

Reliability and performance testing have become a critical requirement for enterprise voice AI deployment. Future AGI’s Simulate is billed as the world’s first dedicated AI-powered testing infrastructure engineered specifically for voice AI applications, addressing a gap in the AI development lifecycle. The platform deploys intelligent agent simulations that evaluate voice models across thousands of real-world conversational scenarios within minutes, reportedly cutting traditional testing timelines from weeks to hours.

Unlike conventional testing approaches that rely on manual processes or transcript-only analysis, Simulate provides audio-first evaluation, analyzing actual voice quality, tone variations, response timing, and conversational flow dynamics. The platform’s AI agents simulate varied user personas, accents, background noise conditions, and edge cases that human testers often miss, so that voice AI systems are validated across a wide range of demographic and environmental conditions before production deployment.

Key Features

Simulate delivers a comprehensive suite of AI-powered testing and optimization capabilities designed to streamline voice AI development while maintaining enterprise-grade reliability standards.

- Intelligent Synthetic Dataset Generation: Creates vast, linguistically diverse datasets covering multiple accent patterns, demographic variations, and real-world conversational scenarios, eliminating the need for extensive manual data collection while ensuring comprehensive edge case coverage across global user populations.

- Advanced Prompt and Model Experimentation: Enables rapid iteration across multiple prompt configurations, voice models, and conversational logic pathways, allowing teams to optimize performance parameters through systematic A/B testing and comparative analysis frameworks.

- Ground Truth-Independent Automated Evaluation: Employs proprietary AI evaluation models that assess voice AI outputs without requiring pre-labeled datasets, utilizing advanced metrics including conversational coherence, emotional appropriateness, and contextual relevance scoring across 50+ built-in evaluation templates.

- Real-Time Production Observability and Monitoring: Provides continuous performance tracking with instant anomaly detection, enabling teams to identify drift, hallucinations, and performance degradation in live environments while maintaining detailed audit trails for compliance requirements.

- Comprehensive Safety and Bias Detection: Implements multi-layered guardrails including toxicity detection, bias identification, prompt injection prevention, and personally identifiable information (PII) filtering, ensuring responsible AI deployment across regulated industries.

How It Works

Simulate operates through a four-stage evaluation cycle (data ingestion, configuration, simulation, and evaluation) designed to validate voice AI systems with minimal human intervention. The process begins with data ingestion, where teams upload their voice AI configurations and training datasets, or connect live production systems through supported integrations including Vapi, Retell, ElevenLabs, and custom telephony APIs.

Following data integration, teams configure evaluation parameters using Simulate’s visual interface or programmatic SDKs, defining custom metrics, performance thresholds, and safety guardrails specific to their use case requirements. The platform then launches thousands of concurrent AI agent simulations, each representing different user personas, conversation patterns, and environmental conditions, systematically testing every aspect of the voice AI system’s capabilities.
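The idea of covering many personas and conditions can be illustrated with a small scenario matrix. The following is a minimal sketch only, not Simulate’s SDK: the `Scenario` class, the `build_scenarios` helper, and the persona, accent, and noise values are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Scenario:
    """One simulated caller profile (hypothetical structure, not a Simulate type)."""
    persona: str
    accent: str
    noise: str


def build_scenarios(personas, accents, noise_levels):
    """Return every persona x accent x noise combination as a Scenario."""
    return [Scenario(p, a, n) for p, a, n in product(personas, accents, noise_levels)]


# Illustrative values only; a real test plan would define its own dimensions.
scenarios = build_scenarios(
    personas=["impatient caller", "first-time user", "elderly customer"],
    accents=["en-US", "en-IN", "en-GB", "en-AU"],
    noise_levels=["quiet", "street", "call-center"],
)

print(len(scenarios))  # 3 * 4 * 3 = 36 combinations
```

Even three small dimensions yield 36 distinct scenarios, which suggests how a test matrix can grow into the thousands once conversation patterns and edge cases are added.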

Throughout the simulation process, Simulate’s proprietary evaluation models analyze both audio quality and conversational effectiveness, generating detailed performance reports with actionable insights, root cause analysis, and specific optimization recommendations. The platform maintains continuous feedback loops, enabling iterative model refinement and automated performance optimization based on evaluation outcomes.
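As a rough illustration of how per-simulation scores might be rolled up against performance thresholds, consider the sketch below. It assumes each simulation returns a dictionary of metric scores between 0 and 1; the `summarize` function, the metric names, and the threshold values are hypothetical and do not reflect Simulate’s actual report format.

```python
from statistics import mean


def summarize(results, thresholds):
    """Average each metric across runs and flag whether it meets its minimum.

    results: list of dicts mapping metric name -> score in [0, 1]
    thresholds: dict mapping metric name -> minimum acceptable mean score
    """
    report = {}
    for metric, minimum in thresholds.items():
        scores = [r[metric] for r in results if metric in r]
        avg = mean(scores) if scores else None
        report[metric] = {
            "mean": avg,
            "passed": avg is not None and avg >= minimum,
        }
    return report


# Hypothetical scores from two simulated conversations.
results = [
    {"audio_quality": 1.0, "coherence": 0.75},
    {"audio_quality": 0.75, "coherence": 0.5},
]
thresholds = {"audio_quality": 0.8, "coherence": 0.8}

report = summarize(results, thresholds)
for metric, outcome in report.items():
    print(metric, outcome)
```

In this example the audio quality metric clears its threshold while coherence does not, which is the kind of signal a team could use to decide where to focus the next refinement iteration.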

Use Cases

Simulate serves diverse applications across industries where voice AI reliability directly impacts business outcomes and customer satisfaction.

- Enterprise Customer Service Automation: Validates voice AI agents handling complex customer inquiries, ensuring accurate issue resolution, appropriate escalation protocols, and consistent brand voice across high-volume contact center operations while maintaining compliance with industry regulations and accessibility standards.

- Healthcare and Telemedicine Voice Interfaces: Provides rigorous testing for voice AI systems in medical environments where accuracy is critical, including appointment scheduling, symptom assessment, medication reminders, and patient information collection, ensuring HIPAA compliance and clinical accuracy standards.

- Financial Services Voice Banking: Enables comprehensive testing of voice AI applications handling sensitive financial transactions, account inquiries, fraud detection conversations, and regulatory compliance scenarios, ensuring security protocols and accurate transaction processing across diverse customer demographics.

- Autonomous Systems and Robotics Integration: Supports testing of voice interfaces in autonomous vehicles, smart home devices, and industrial robotics applications where clear, reliable communication is essential for safety and operational effectiveness in dynamic environments.

Pros & Cons

Advantages

Simulate provides substantial competitive advantages for organizations deploying mission-critical voice AI applications at enterprise scale.

- Unprecedented Testing Speed and Coverage: Reduces voice AI testing timelines from weeks to hours while achieving comprehensive scenario coverage that would be impossible through manual testing, enabling faster time-to-market and more confident deployments across complex use cases.

- Production-Grade Accuracy and Reliability: Reports enterprise-level evaluation precision with a claimed 99% accuracy rate and comprehensive error detection, which is intended to reduce the post-deployment issues and customer satisfaction problems typically associated with inadequately tested voice AI systems.

- Comprehensive Multi-Modal Safety Framework: Integrates advanced safety guardrails including real-time bias detection, toxicity filtering, and prompt injection prevention, ensuring responsible AI deployment that meets regulatory requirements across highly regulated industries.

- Cost-Effective Quality Assurance at Scale: Eliminates the need for extensive manual QA teams while providing more thorough testing coverage, resulting in up to 90% reduction in evaluation overhead costs and 10x faster development iteration cycles for enterprise AI teams.

- Enterprise Integration and Compliance: Offers seamless integration with existing AI development workflows, OpenTelemetry observability standards, and comprehensive audit trails meeting enterprise security and compliance requirements including SOC 2 and GDPR standards.

Disadvantages

While Simulate provides comprehensive voice AI testing capabilities, certain considerations may affect adoption for specific use cases.

- Voice AI Specialization Focus: Platform optimization specifically targets voice and conversational AI applications, which may limit its applicability for teams requiring comprehensive multi-modal AI testing beyond audio and text interactions.

- Learning Curve for Advanced Configuration: While user-friendly for basic testing scenarios, maximizing the platform’s advanced evaluation capabilities and custom metric creation may require significant onboarding time for teams new to AI evaluation methodologies.

- Subscription Requirements for Full Feature Access: Advanced features including unlimited concurrent testing, enterprise integrations, and custom safety guardrail configuration require Pro or Enterprise tier subscriptions, potentially limiting accessibility for smaller development teams or proof-of-concept projects.

How Does It Compare?

In the 2025 landscape of AI evaluation and testing platforms, Simulate by Future AGI positions itself as the only platform engineered specifically for comprehensive voice AI testing, which differentiates it from broader AI evaluation tools and general observability platforms.

- Galileo vs Future AGI: Galileo provides a comprehensive AI evaluation platform with strong support for LLM testing, RAG systems, and agent workflows through its Luna Evaluation Suite and recently launched Agentic Evaluations. While Galileo offers excellent general-purpose AI evaluation capabilities, automated testing workflows, and enterprise-scale monitoring, it lacks Simulate’s specialized voice AI testing infrastructure and audio-first evaluation approach that analyzes actual voice quality, tone, and conversational dynamics.

- Arize AI vs Future AGI: Arize AI excels in ML observability and model performance monitoring with strong drift detection capabilities and comprehensive dashboard analytics through their Phoenix platform. Arize provides excellent support for traditional machine learning workflows and offers LLM observability features. However, Arize’s platform focuses primarily on post-deployment monitoring and general model performance tracking, lacking the specialized voice AI simulation capabilities and pre-deployment testing infrastructure that define Simulate’s value proposition.

- LangSmith vs Future AGI: LangSmith offers robust LLM application development and testing tools with deep integration into the LangChain ecosystem, providing excellent debugging capabilities and experiment tracking for language model applications. While LangSmith serves developers building LLM applications effectively, it does not provide the specialized voice AI testing, audio quality evaluation, or large-scale conversational simulation capabilities that make Simulate unique in the voice AI testing space.

- Patronus AI vs Future AGI: Patronus AI provides specialized evaluation tools for LLM safety, hallucination detection, and bias assessment with strong focus on responsible AI deployment. While Patronus offers valuable safety evaluation capabilities, its platform primarily addresses general LLM safety concerns rather than the specific challenges of voice AI testing, including audio quality analysis, conversational flow evaluation, and real-time voice interaction testing.

- Traditional Voice Testing Platforms vs Future AGI: Conventional voice testing solutions typically require manual testing processes, human evaluators, or basic transcript analysis tools that miss critical voice-specific quality metrics. These legacy approaches cannot achieve the scale, consistency, or comprehensive coverage that Simulate provides through its AI-powered testing infrastructure and audio-first evaluation methodology.

Future AGI’s Simulate uniquely addresses the voice AI testing gap by combining specialized audio evaluation, conversational AI simulation, and enterprise-scale testing infrastructure within a single platform, providing capabilities that no other evaluation platform currently offers for voice AI applications.

Final Thoughts

Future AGI’s Simulate represents a paradigm shift in voice AI quality assurance, addressing a critical infrastructure gap that has limited enterprise adoption of voice AI applications across industries. The platform’s unique combination of AI-powered testing agents, comprehensive audio evaluation capabilities, and enterprise-grade safety frameworks creates compelling value for organizations seeking to deploy reliable voice AI at scale while minimizing post-deployment risks and customer satisfaction issues.

The platform’s strength lies in its specialized focus on voice AI testing challenges that traditional evaluation tools cannot address, including audio quality analysis, conversational flow dynamics, and large-scale scenario simulation across diverse user demographics and environmental conditions. While subscription requirements for advanced features may present initial investment considerations, the platform’s reported ability to cut testing timelines from weeks to hours and evaluation overhead costs by up to 90%, alongside its claimed 99% accuracy rate, could provide substantial return on investment for enterprise voice AI deployments.

For organizations serious about deploying mission-critical voice AI applications in customer service, healthcare, financial services, or autonomous systems, Simulate offers a mature, proven solution that effectively bridges the gap between AI development and reliable production deployment. The platform’s continued innovation and expanding enterprise customer base suggest strong potential for maintaining competitive advantage as voice AI adoption accelerates across industries, making it an essential tool for teams committed to shipping reliable, high-performing voice AI experiences.