Overview

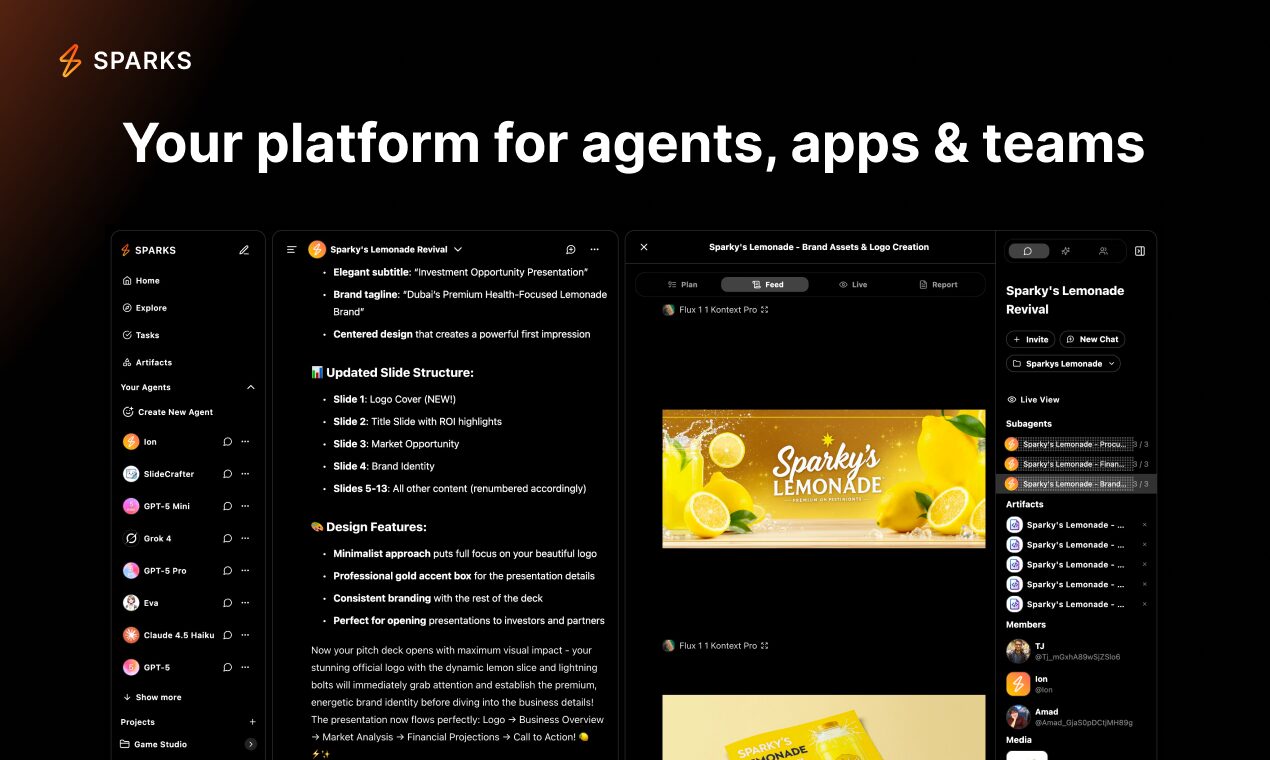

In the rapidly evolving landscape of no-code AI development platforms, Sparks AI positions itself as an all-in-one workspace and agent-building ecosystem designed to simplify the creation, deployment, and management of custom AI agents. Launched on Product Hunt on October 21, 2025, Sparks AI addresses a common pain point among developers and businesses: the complexity of orchestrating multiple tools, APIs, and subscriptions to build functional AI agents. The platform provides a unified environment where users can construct AI agents using templates, select from multiple leading language models including GPT-4o, Claude (Anthropic’s models), and Google Gemini, integrate specialized tools from a marketplace, and enable collaboration between human team members and multiple AI agents within shared project workspaces. Sparks AI’s distinguishing characteristic is its “Super-Agent” architecture enabling agents to autonomously spawn sub-agents for tackling complex, multi-step tasks—transforming workflows that traditionally required days into processes completing in minutes.

Key Features

Sparks AI delivers a comprehensive feature set designed to democratize AI agent development for both technical and non-technical users:

No-Code Agent Builder with Templates: The platform provides pre-built agent templates spanning research assistants, coding helpers, productivity tools, and specialized workflow automation. Users customize these templates by selecting preferred language models, configuring agent personalities, and defining behavioral parameters through intuitive interfaces without writing code.

Multi-LLM Support and Flexibility: Sparks AI integrates with multiple frontier language models including OpenAI’s GPT-4o and GPT-4 Turbo (note: despite marketing claims, GPT-5 is not publicly available as of October 2025), Anthropic’s Claude family (including Claude Sonnet 4), and Google’s Gemini models. This model-agnostic approach enables users to select optimal LLMs based on task requirements, cost considerations, and performance characteristics.

Tool and Plugin Marketplace: An integrated app store provides pre-built integrations and tools including web search capabilities, image generation, video generation, code execution environments, data analysis modules, API connectors, and custom knowledge base integration. Users expand agent capabilities by adding relevant tools from this marketplace.

Persistent Memory and Context Retention: Unlike stateless chatbots requiring repeated context provision, Sparks AI agents maintain persistent memory across conversations and sessions. This enables agents to reference previous interactions, learn user preferences, accumulate knowledge about projects, and provide continuity across extended workflows.

Super-Agent and Sub-Agent Spawning: The platform’s signature capability enables primary agents to analyze complex tasks, determine optimal decomposition strategies, and autonomously spawn specialized sub-agents to handle component pieces in parallel. For example, instructing an agent to “develop a business plan” triggers creation of dedicated sub-agents for market research, financial modeling, and brand strategy—each working simultaneously while coordinating through the parent agent.

Real-Time Collaboration and Shared Workspaces: Sparks AI provides synchronized group chat environments where human team members and multiple AI agents interact simultaneously. All conversations, artifacts, and project context remain synchronized in real-time, enabling collaborative workflows that blend human judgment with AI automation.

Agent Computer and Artifacts System: Agents operate within computational environments enabling code execution, data processing, and artifact generation. Users can review, edit, and refine outputs (artifacts) produced by agents, maintaining human oversight while benefiting from AI acceleration.

Public Agent Store and Community Marketplace: Beyond building custom agents, users can browse a marketplace of community-contributed agents addressing common use cases, download pre-configured agents for immediate deployment, and publish their own agents for others to utilize—creating network effects and knowledge sharing.

How It Works

Sparks AI operates through an integrated workflow combining agent configuration, task delegation, and collaborative execution. New users begin by either selecting pre-built agents from the Agent Store or creating custom agents from templates. The agent configuration interface guides users through selecting a base language model (GPT-4o, Claude, or Gemini), integrating relevant tools from the marketplace based on intended functions (web search for research agents, code execution for development assistants, etc.), and defining agent behavior through natural language instructions or structured prompts.
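
The configuration step described above can be pictured as a small data structure: a model choice, a list of tools, and a block of natural language instructions. The following is a minimal sketch under stated assumptions, not the Sparks AI API. The class name `AgentConfig`, the model identifiers, and the tool name `web_search` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field

# Hypothetical identifiers for the three model families named in the review.
SUPPORTED_MODELS = {"gpt-4o", "claude", "gemini"}


@dataclass
class AgentConfig:
    """Illustrative agent definition: base model, tools, and instructions."""
    name: str
    model: str
    instructions: str
    tools: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means the config is usable."""
        problems = []
        if self.model not in SUPPORTED_MODELS:
            problems.append(f"unsupported model: {self.model}")
        if not self.instructions.strip():
            problems.append("instructions must not be empty")
        if len(set(self.tools)) != len(self.tools):
            problems.append("duplicate tools listed")
        return problems


# A research agent pairs a model with a web search tool, as in the text above.
research_agent = AgentConfig(
    name="research-assistant",
    model="claude",
    instructions="Summarize competitors in the SaaS accounting market.",
    tools=["web_search"],
)
print(research_agent.validate())
```

Validating before deployment is a design choice of this sketch; the review does not say how the platform itself checks configurations.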

Once configured, agents deploy within the user’s workspace, where they are accessible through chat interfaces. Users describe desired outcomes in natural language, such as “research the competitive landscape for SaaS accounting tools” or “generate Python code for a data visualization dashboard,” and agents interpret requests, determine necessary steps, and execute workflows autonomously.

For complex, multi-faceted tasks, Sparks AI’s Super-Agent architecture analyzes requirements and determines when decomposition would improve efficiency. The system spawns specialized sub-agents, each assigned specific subtasks aligned with their configured capabilities. These sub-agents work in parallel or sequentially as appropriate, coordinating through the parent agent and synthesizing results into unified deliverables. Users observe this hierarchical execution in real-time through the interface, maintaining visibility into agent reasoning and progress.
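
The decompose, run in parallel, then synthesize pattern described above can be sketched with Python's `asyncio`. This is an illustration of the general technique, not Sparks AI's implementation: `run_sub_agent`, `decompose`, and `run_parent_agent` are hypothetical names, the decomposition is hard-coded rather than decided by a model, and the sub-agent roles are borrowed from the business plan example earlier in this review.

```python
import asyncio


async def run_sub_agent(role: str, task: str) -> str:
    """Hypothetical stand-in: a real sub-agent would call an LLM and tools here."""
    await asyncio.sleep(0)  # placeholder for model or tool latency
    return f"[{role}] findings for: {task}"


def decompose(task: str) -> dict[str, str]:
    """Map each sub-agent role to its subtask (hard-coded for this sketch)."""
    return {
        "market_research": f"market research for {task}",
        "financial_modeling": f"financial model for {task}",
        "brand_strategy": f"brand strategy for {task}",
    }


async def run_parent_agent(task: str) -> str:
    subtasks = decompose(task)
    # Run every sub-agent concurrently; gather returns results in input order.
    results = await asyncio.gather(
        *(run_sub_agent(role, sub) for role, sub in subtasks.items())
    )
    # Synthesis step: a plain join stands in for the parent's summarization.
    return "\n".join(results)


report = asyncio.run(run_parent_agent("a business plan"))
print(report)
```

Because `asyncio.gather` preserves input order, the parent can match each result to its role without extra bookkeeping, which keeps the synthesis step simple.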

The collaborative workspace dimension enables multiple human team members to interact with the same agents, share project context, and maintain conversation continuity. Agents remember previous interactions, reference earlier discussions, and build cumulative understanding of project goals and constraints across sessions.
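
Session-spanning memory of this kind amounts to writing notes to durable storage and reloading them later. The sketch below assumes a simple JSON file keyed by project. The `ProjectMemory` class and the project name are hypothetical, and nothing here reflects how Sparks AI actually stores context.

```python
import json
import tempfile
from pathlib import Path


class ProjectMemory:
    """Append-only notes per project, persisted as JSON so a later session can reload them."""

    def __init__(self, path: Path):
        self.path = path
        # Load earlier notes if the file exists; otherwise start empty.
        self.notes = json.loads(path.read_text()) if path.exists() else {}

    def remember(self, project: str, note: str) -> None:
        self.notes.setdefault(project, []).append(note)
        self.path.write_text(json.dumps(self.notes))

    def recall(self, project: str) -> list[str]:
        return list(self.notes.get(project, []))


# A temporary directory keeps the example from leaving files in the working directory.
store = Path(tempfile.mkdtemp()) / "memory.json"

# Session 1: the agent records a user preference.
ProjectMemory(store).remember("q4-launch", "User prefers bullet-point summaries")

# Session 2: a fresh object reloads what the earlier session stored.
recalled = ProjectMemory(store).recall("q4-launch")
print(recalled)
```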

The platform operates on a credit-based system in which agent interactions, LLM API calls, and tool usage consume credits. The October 2025 launch offered 500 free credits for new sign-ups, with additional credits available through paid plans. Specific pricing tiers remain unclear from available information, but the credit system shows that the platform has moved beyond the “Pricing TBD” status given in earlier descriptions.
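
A credit system like this behaves as a running balance that each action draws down. The sketch below illustrates the bookkeeping only. The 500-credit starting balance comes from the launch offer, while the per-action costs are invented placeholders, since the platform's actual consumption rates are not published. `CreditLedger` and `InsufficientCredits` are hypothetical names.

```python
class InsufficientCredits(Exception):
    """Raised when an action costs more than the remaining balance."""


class CreditLedger:
    """Tracks a credit balance and a history of charges."""

    def __init__(self, balance: int):
        self.balance = balance
        self.history: list[tuple[str, int]] = []

    def charge(self, action: str, cost: int) -> int:
        """Deduct cost for an action and return the remaining balance."""
        if cost > self.balance:
            raise InsufficientCredits(
                f"{action} needs {cost} credits, only {self.balance} left"
            )
        self.balance -= cost
        self.history.append((action, cost))
        return self.balance


ledger = CreditLedger(balance=500)  # free credits from the launch offer
ledger.charge("llm_call", 12)       # placeholder cost, not a published rate
ledger.charge("web_search", 3)      # placeholder cost, not a published rate
print(ledger.balance)  # 485
```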

Use Cases

Sparks AI addresses diverse scenarios spanning individual productivity, team collaboration, and organizational automation:

Custom Research and Analysis Agents: Users build specialized research assistants capable of web search, document analysis, data synthesis, and report generation. These agents can monitor competitive landscapes, track industry trends, aggregate news across sources, and compile comprehensive briefs—tasks traditionally requiring hours of manual information gathering completed autonomously in minutes.

Code Generation and Development Assistance: Technical teams create coding agents equipped with code execution environments, GitHub integration, and technical documentation access. These agents generate boilerplate code, debug existing implementations, write test suites, and provide pair-programming support, accelerating development cycles while maintaining human oversight for architectural decisions.

Multi-Agent In-App Automation Teams: Organizations integrate Sparks AI’s agent teams directly into business applications to automate complex internal workflows. For example, customer onboarding processes might employ agent teams where one sub-agent handles document collection, another performs compliance verification, a third generates personalized communications, and a coordinator agent orchestrates handoffs—replacing manual, error-prone processes with consistent automation.

Agent Marketplace Publishing and Monetization: Developers with specialized domain expertise create highly refined agents addressing specific market needs, such as legal document analysis, medical research synthesis, or financial modeling, and publish them to the Sparks AI Agent Store. This enables monetization of AI agent development skills while providing end users access to professionally configured solutions without starting from scratch.

No-Code AI Application Prototyping: Product teams rapidly prototype AI-powered features and applications without engineering resources. Business analysts and product managers use Sparks AI to validate concepts, demonstrate functionality to stakeholders, and gather user feedback before committing development resources to full implementations—dramatically accelerating innovation cycles.

Cross-Functional Team Collaboration with AI Augmentation: Distributed teams use shared workspaces where both human members and AI agents contribute to projects. Marketing teams collaborate with content-generation agents, strategy teams work alongside analysis agents, and operations teams coordinate with automation agents—creating hybrid human-AI workflows that combine human creativity with AI scale.

Pros & Cons

Advantages

Genuine No-Code Accessibility: Sparks AI successfully eliminates coding requirements for sophisticated AI agent deployment, making frontier AI capabilities accessible to business users, domain experts, and non-technical innovators who previously lacked pathways to leverage automation. The template-based approach with visual configuration dramatically lowers barriers compared to code-first platforms.

Model Flexibility and Future-Proofing: Unlike platforms locked to single LLM providers, Sparks AI’s support for OpenAI, Anthropic, and Google models provides hedging against vendor lock-in, enables optimal model selection per task, and ensures access to latest AI capabilities as providers release new versions. Users aren’t captive to a single provider’s pricing, performance, or policy changes.

Sophisticated Multi-Agent Architecture: The Super-Agent system enabling autonomous sub-agent spawning represents genuine architectural innovation beyond simple chatbot interfaces. This hierarchical task decomposition approach mirrors how human teams organize complex projects, providing scalability for intricate workflows that overwhelm single-agent systems.

Integrated Collaborative Environment: The unified workspace combining human chat, multiple AI agents, persistent memory, and synchronized project context eliminates the fragmentation plaguing workflows spanning multiple tools. Users avoid constant context-switching, redundant information provision, and integration complexity inherent in stitching together disparate platforms.

Community Marketplace Creating Network Effects: The Agent Store fostering community contribution and knowledge sharing accelerates ecosystem maturity. New users benefit from pre-built agents encoding best practices, experienced builders gain distribution channels, and the platform overall becomes more valuable as agent variety increases—classic positive network effects.

Disadvantages

Misleading GPT-5 Claims: The platform’s marketing prominently features “GPT-5” support despite GPT-5 not being publicly available as of October 2025. This represents either significant misinformation or premature commitment to future capabilities. The platform likely supports GPT-4o and GPT-4 Turbo instead. Those models are capable, but marketing that makes verifiably false claims about available models creates credibility concerns.

Incomplete Pricing Transparency: The “Pricing TBD” label in earlier descriptions contradicts evidence of established credit-based monetization (the 500 free credits launch offer indicates that a pricing structure exists). Prospective users face uncertainty about long-term costs, credit consumption rates per task type, and price-performance comparisons against competitors. This opacity complicates budgeting and ROI evaluation.

Very Early Stage with Limited Track Record: Having launched October 21, 2025, Sparks AI represents an extremely new entrant with minimal real-world deployment data, limited user testimonials, and unproven reliability at scale. The Product Hunt launch achieved modest traction without breakthrough visibility, suggesting market validation remains nascent. Early adopters assume heightened risks regarding platform stability, feature completeness, and long-term viability.

API Cost Pass-Through Model: Users must either provide their own API keys to external LLM providers or purchase platform credits covering API costs. This creates variable, potentially unpredictable expenses based on usage intensity, model selection (GPT-4 Turbo costs significantly more than GPT-3.5), and task complexity. Organizations accustomed to fixed SaaS subscriptions may struggle with budgeting against usage-based pricing.

Limited Documentation and Educational Resources: As a newly launched platform, comprehensive documentation, tutorial content, community forums, and educational materials remain sparse compared to mature competitors. New users face steeper learning curves, limited troubleshooting support, and reduced ecosystem resources for optimizing agent configurations and workflows.

Unclear Enterprise Features and Governance: Information regarding enterprise-critical capabilities—SSO/SAML authentication, role-based access controls, audit logging, data residency options, compliance certifications (SOC 2, HIPAA, GDPR), and SLAs—remains unclear. Enterprise buyers require detailed security, compliance, and operational assurances absent from current marketing materials.

How Does It Compare?

The no-code AI agent builder market in October 2025 features diverse platforms each optimizing for different user profiles, technical sophistication levels, and deployment scenarios:

Relevance AI: Represents a mature, enterprise-focused platform enabling autonomous AI agent and multi-agent team development for business process automation across sales, marketing, support, research, and operations. Relevance AI emphasizes production-ready reliability with SOC 2 Type II certification, GDPR compliance, and flexible data storage across multiple regions. The platform provides pre-built specialized agents (like Bosh the Sales Agent for prospect engagement and meeting scheduling) alongside custom agent builders. Relevance AI targets organizations prioritizing security, compliance, and enterprise governance over bleeding-edge features. Compared to Sparks AI’s very recent launch, Relevance AI offers greater maturity, established customer deployments, and proven scalability—though potentially less flexibility in model selection and fewer experimental features.

Flowise: An open-source platform providing visual, low-code LLM application development through drag-and-drop node-based workflows. Flowise supports over 100 integrations including LangChain, LlamaIndex, OpenAI, and local models, enabling sophisticated AI agent orchestration while maintaining code transparency and customizability inherent in open-source projects. The platform appeals to developers comfortable with technical concepts who value open architecture, local deployment options, and freedom from vendor lock-in. Flowise differs fundamentally from Sparks AI in licensing model (open-source vs. commercial), target user (developers vs. business users), and deployment philosophy (self-hosted flexibility vs. managed service convenience). Users prioritizing transparency, customization, and control prefer Flowise; those seeking polished user experiences and managed infrastructure favor Sparks AI.

Lindy: A comprehensive no-code platform focused specifically on business automation through AI agents handling meeting scheduling, lead qualification, note summarization, and workflow management across teams. Lindy emphasizes accessibility with a free tier providing 400 credits monthly and paid plans under $50, making it budget-friendly for small teams testing AI automation. The platform integrates with 3,000+ applications, embedding agents seamlessly into existing CRM, marketing, and operations tools. Lindy’s strength lies in pre-built workflows optimized for common business processes rather than general-purpose agent building. Compared to Sparks AI’s flexible, multi-purpose architecture, Lindy trades configurability for immediate, business-process-specific value with minimal setup.

Zapier AI (Zapier Central): Extends Zapier’s automation platform into AI agent territory by enabling users to create and manage bots automating tasks across Zapier’s ecosystem of 6,000+ integrated applications. Zapier AI leverages the company’s massive integration library, making it exceptionally powerful for users already embedded in Zapier workflows. The platform emphasizes familiar, accessible interfaces for non-technical users automating repetitive tasks. Zapier AI competes with Sparks AI primarily on integration breadth and platform maturity rather than sophisticated multi-agent coordination. Users needing broad app connectivity across established business tools favor Zapier AI; those prioritizing advanced agent intelligence and collaboration capabilities prefer Sparks AI.

Voiceflow: Specializes in building conversational AI experiences including chatbots and voice assistants for platforms like Alexa, Google Assistant, websites, and mobile apps. Voiceflow emphasizes voice-first design, multi-language support, and multi-modal capabilities (voice + visual). The platform targets companies creating customer-facing conversational interfaces rather than internal automation or research agents. Voiceflow and Sparks AI serve largely non-overlapping use cases—Voiceflow for customer engagement and conversational UI, Sparks AI for internal productivity and complex task automation.

n8n and Make (formerly Integromat): These visual automation platforms provide workflow building through node-based interfaces connecting hundreds of services and APIs. Both support AI integrations but function primarily as general-purpose automation platforms rather than AI-first agent builders. They appeal to technically proficient users comfortable with API concepts, webhooks, and data transformation. While capable of incorporating AI via integrations, they lack Sparks AI’s agent-centric architecture, persistent memory, and autonomous sub-agent spawning. Users seeking flexible, general-purpose automation with AI as one component among many choose n8n or Make; those wanting AI agents as primary actors prefer platforms like Sparks AI.

Stack AI, LangFlow, and Botpress: These platforms represent middle-ground solutions combining visual builder interfaces with greater technical depth than pure no-code tools. Stack AI provides no-code agent building with extensive LLM provider support and data source connectivity. LangFlow focuses specifically on LangChain-based application development with visual workflow design. Botpress targets enterprise chatbot and conversational AI development with open-source foundations and strong customization options. Each offers more technical control than Sparks AI while remaining more accessible than code-first frameworks—appealing to teams with some technical capacity seeking balance between ease-of-use and customization depth.

SmythOS: Positions itself as a comprehensive platform combining visual no-code design with advanced capabilities including extensive deployment options, multi-agent orchestration, and enterprise security controls. SmythOS emphasizes flexibility in deployment (cloud, on-premise, hybrid), robust monitoring and debugging tools, and API-first architecture enabling programmatic control alongside visual building. The platform targets organizations requiring enterprise-grade features while maintaining accessibility for non-developers. SmythOS competes directly with Sparks AI in the sophisticated multi-agent orchestration space, differentiated by greater deployment flexibility and established enterprise feature set versus Sparks AI’s newer, potentially more experimental approach.

Sparks AI occupies a specific position emphasizing unified workspace collaboration, flexible LLM selection, hierarchical multi-agent task decomposition, and community marketplace network effects. Its primary competitive advantages include the Super-Agent sub-agent spawning architecture, integrated human-AI collaborative environment, and model-agnostic approach avoiding vendor lock-in. However, users must carefully weigh these strengths against significant limitations: misleading GPT-5 marketing claims undermining credibility, very recent launch creating early-adopter risks, unclear pricing models complicating cost assessment, and limited enterprise security/compliance documentation. Organizations should conduct thorough proof-of-concept evaluations comparing Sparks AI against more mature alternatives based on specific use cases, security requirements, budget constraints, and risk tolerance before committing to production deployments.

Final Thoughts

Sparks AI enters the increasingly crowded no-code AI agent builder market with genuinely innovative features—particularly its hierarchical Super-Agent architecture enabling autonomous sub-agent spawning for complex task decomposition—that differentiate it from simpler chatbot platforms and single-agent systems. The unified collaborative workspace combining human team members with multiple AI agents addresses real workflow fragmentation pain points, while model flexibility across OpenAI, Anthropic, and Google providers future-proofs against vendor lock-in inherent in single-LLM platforms.

However, prospective users and evaluators must navigate several significant caveats and inaccuracies in the platform’s positioning. Most critically, the prominent marketing claim of “GPT-5” support is demonstrably false—GPT-5 is not publicly available as of October 2025, and the platform likely supports GPT-4o and GPT-4 Turbo. This misrepresentation raises serious credibility concerns about marketing accuracy and whether other claimed capabilities similarly overstate actual functionality. Organizations making purchasing decisions based on GPT-5 availability would be materially misled.

Additionally, the “Pricing TBD” label in earlier descriptions contradicts available evidence of established credit-based monetization, including the 500 free credits launch offer. While exact pricing tiers, credit consumption rates, and cost-per-task remain opaque, a pricing structure clearly exists. This lack of transparency complicates financial planning, prevents accurate ROI modeling, and hinders comparison against competitors with published pricing. Organizations require clear cost visibility to budget appropriately and assess whether Sparks AI delivers value commensurate with investment.

The platform’s October 21, 2025 launch date means Sparks AI represents an extremely young product with minimal production deployment track record, limited user testimonials, sparse documentation, and unproven scalability. The Product Hunt launch achieved modest reception without breakthrough traction, suggesting market validation remains early-stage. Early adopters assume heightened risks regarding feature completeness, platform stability, support responsiveness, and long-term viability. Mission-critical applications and risk-averse organizations should defer adoption pending greater market maturity.

The competitive landscape also includes significant platforms beyond those usually named alongside Sparks AI. Tools like Lindy (business-process-focused automation), Zapier AI (6,000+ app integrations), n8n (open-source workflow automation), Voiceflow (conversational AI specialists), Stack AI, LangFlow, Botpress, and SmythOS each serve distinct market segments with proven capabilities, established user bases, and clear differentiation. Prospective users should evaluate Sparks AI against this broader competitive set rather than the limited subset presented in the platform’s marketing materials.

The comparison with competitors reveals important positioning nuances. Platforms like Relevance AI offer greater enterprise maturity with SOC 2 certification and GDPR compliance for organizations prioritizing governance. Open-source alternatives like Flowise provide transparency and customization for technically proficient teams. Integration-rich platforms like Zapier AI deliver immediate business value through existing workflow connectivity. Sparks AI’s differentiation lies primarily in its Super-Agent multi-agent architecture and unified collaborative workspace rather than superiority across all dimensions.

Ideal Sparks AI users include innovative teams that are comfortable with early-stage platforms and willing to tolerate documentation gaps and potential instability in exchange for access to cutting-edge multi-agent capabilities, organizations requiring model flexibility to hedge against single-vendor risk, and collaborative teams benefiting from unified human-AI workspaces that eliminate tool fragmentation. The platform is less suitable for enterprise buyers requiring comprehensive security certifications and compliance documentation, risk-averse organizations needing proven stability and established support, teams demanding transparent, predictable pricing for accurate budgeting, or users expecting GPT-5 capabilities based on misleading marketing claims.

For those considering Sparks AI, rigorous proof-of-concept evaluation is essential. Leverage the 500 free credits to test platform capabilities against specific use cases, validate whether model selection (GPT-4o, Claude, Gemini) meets performance requirements given GPT-5 unavailability, assess credit consumption rates for representative tasks to estimate ongoing costs, evaluate documentation adequacy and support responsiveness, and conduct side-by-side comparisons with more mature alternatives like Relevance AI, Lindy, or Zapier AI to determine whether Sparks AI’s unique features justify early-adopter risks.
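
One way to turn the credit-consumption step of such an evaluation into numbers is to log credits used per run during the trial and project how far the free allowance stretches. The sketch below assumes hypothetical task names and made-up measurements. Only the 500-credit figure comes from the launch offer, and real consumption would have to be read from the platform during testing.

```python
import math
from statistics import mean

FREE_CREDITS = 500  # launch offer cited in this review

# Made-up trial measurements: credits consumed per run of each task type.
measured = {
    "competitor_brief": [40, 55, 45],
    "code_review": [20, 25, 15],
}


def summarize(samples: dict[str, list[int]], budget: int) -> dict[str, dict[str, float]]:
    """Average credits per run and how many whole runs fit within the budget."""
    summary = {}
    for task, costs in samples.items():
        avg = mean(costs)
        summary[task] = {
            "avg_credits": avg,
            "runs_within_budget": math.floor(budget / avg),
        }
    return summary


summary = summarize(measured, FREE_CREDITS)
for task, stats in summary.items():
    print(task, stats)
```

Multiplying the average by an expected monthly run count gives a rough credit budget, which can then be priced once the paid tiers are known.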

Sparks AI demonstrates promising technical innovation in multi-agent orchestration and collaborative AI workspaces, representing genuine advancement in no-code agent development. However, realizing this potential requires addressing credibility issues from false GPT-5 claims, providing transparent pricing enabling informed financial decisions, expanding documentation and support infrastructure to match user needs, and building deployment track record proving production readiness. Until these fundamentals mature, Sparks AI remains best suited for experimental projects and innovative early adopters rather than mission-critical deployments requiring proven reliability, comprehensive support, and transparent economics.