
Overview

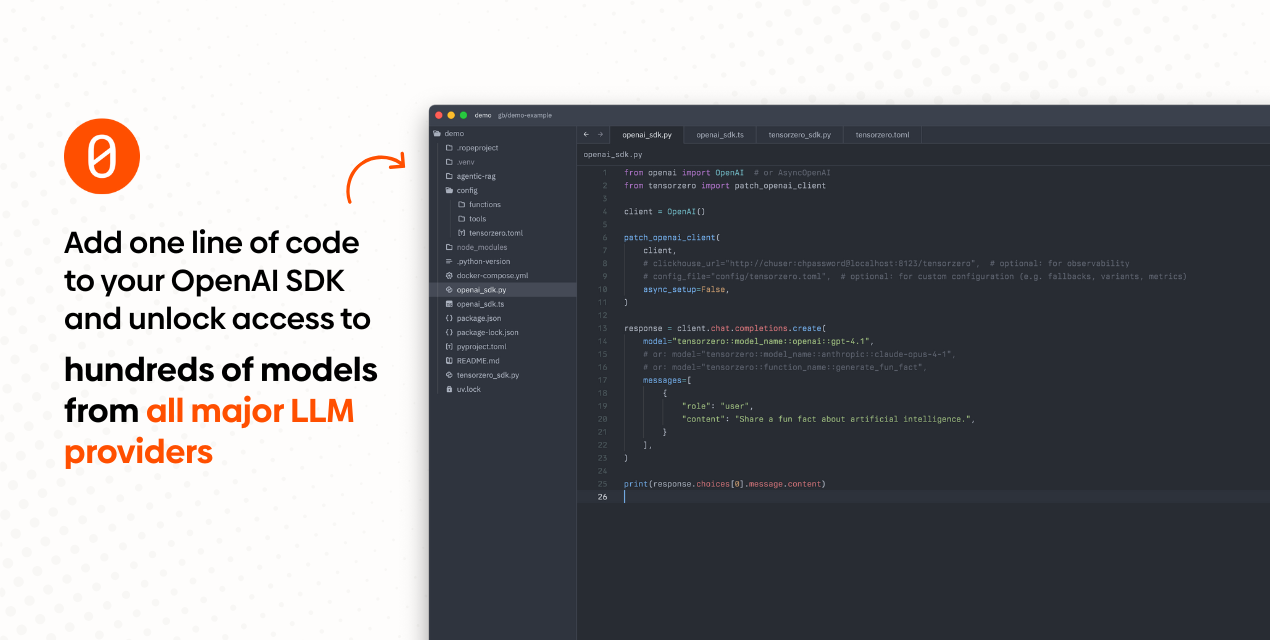

In the rapidly expanding field of LLM application development, TensorZero is an open-source infrastructure platform designed to bridge the gap between prototypes and production-ready systems. As organizations move beyond basic LLM integrations toward sophisticated, data-driven AI applications, TensorZero provides a unified stack that turns production metrics and human feedback into continuously improving models. Backed by a recent $7.3 million seed round and growing adoption, TensorZero addresses the need for production-grade LLM infrastructure that combines performance, observability, and optimization in a single cohesive platform.

Key Features

TensorZero provides a comprehensive suite of production-ready tools designed to optimize the entire LLM application lifecycle:

- High-Performance Gateway: Access multiple LLM providers through a unified API built in Rust, which adds sub-millisecond latency overhead even at high request volumes and supports streaming, structured outputs, and multimodal inference.

- Comprehensive Observability: Store inference data and feedback in your own ClickHouse database, with real-time monitoring, programmatic access, and a built-in UI for tracking performance across your entire LLM application ecosystem.

- Advanced Evaluation Framework: Benchmark individual inferences and end-to-end workflows using both heuristic evaluations and LLM judges, with support for custom evaluation metrics and automated testing pipelines.

- Experimentation and A/B Testing: Deploy with confidence using built-in randomized controlled trials for models, prompts, providers, and hyperparameters, enabling data-driven decision making across your LLM infrastructure.

- Model Optimization Capabilities: Leverage production data for supervised fine-tuning, preference optimization (DPO), and automated prompt engineering using techniques like MIPROv2, turning operational metrics into model improvements.

- Production-Grade Infrastructure: GitOps-friendly configuration management, automatic retries and fallbacks, load balancing, and enterprise security features designed for complex organizational deployments.
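The heuristic side of the evaluation framework can be illustrated with a small, framework-agnostic sketch. The evaluators `exact_match` and `valid_json` and the `run_heuristic_evals` helper are hypothetical and are not TensorZero's evaluation API; they only show how rule-based scores are computed per inference and averaged over a dataset.

```python
import json
from statistics import mean
from typing import Callable, Dict, List, Tuple

# Hypothetical evaluator type: maps (model output, reference answer) to a score in [0, 1].
Evaluator = Callable[[str, str], float]


def exact_match(output: str, reference: str) -> float:
    """1.0 if the output equals the reference after trimming whitespace, else 0.0."""
    return 1.0 if output.strip() == reference.strip() else 0.0


def valid_json(output: str, reference: str) -> float:
    """1.0 if the output parses as JSON, else 0.0; the reference is ignored."""
    try:
        json.loads(output)
        return 1.0
    except json.JSONDecodeError:
        return 0.0


def run_heuristic_evals(
    examples: List[Tuple[str, str]],
    evaluators: Dict[str, Evaluator],
) -> Dict[str, float]:
    """Average each evaluator's score over a list of (output, reference) pairs."""
    return {
        name: mean(fn(output, reference) for output, reference in examples)
        for name, fn in evaluators.items()
    }


if __name__ == "__main__":
    # Synthetic examples for illustration only.
    dataset = [
        ('{"label": "spam"}', '{"label": "spam"}'),
        ('{"label": "ham"}', '{"label": "spam"}'),
        ("not json", '{"label": "ham"}'),
    ]
    print(run_heuristic_evals(dataset, {"exact_match": exact_match, "valid_json": valid_json}))
```

An LLM judge would fit the same `Evaluator` shape, prompting a model to grade the output and parsing its verdict into a score; it is left out here because it needs a live model call.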

How It Works

TensorZero operates as a comprehensive data and learning flywheel for LLM applications, designed to evolve your AI systems based on real-world usage patterns. Organizations begin by integrating TensorZero’s unified gateway API, which consolidates access to multiple LLM providers while maintaining consistent interfaces and performance standards. The platform automatically captures inference data, user feedback, and performance metrics in a structured ClickHouse database, providing both real-time observability and historical analytics.
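As a rough illustration of that integration, the sketch below builds request bodies for the gateway's HTTP inference and feedback endpoints using only the Python standard library. The endpoint paths (`/inference`, `/feedback`), field names, and port 3000 reflect our reading of TensorZero's HTTP API and should be checked against the current API reference; the helper functions and the `draft_reply` function name are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: a TensorZero gateway is listening locally on port 3000.
GATEWAY_URL = "http://localhost:3000"


def build_inference_request(function_name: str, user_message: str) -> Dict[str, Any]:
    """JSON body for POST /inference, targeting a function defined in the gateway config."""
    return {
        "function_name": function_name,
        "input": {"messages": [{"role": "user", "content": user_message}]},
    }


def build_feedback_request(metric_name: str, inference_id: str, value: Any) -> Dict[str, Any]:
    """JSON body for POST /feedback, attaching a metric value to an earlier inference."""
    return {"metric_name": metric_name, "inference_id": inference_id, "value": value}


def post_json(path: str, body: Dict[str, Any]) -> Dict[str, Any]:
    """POST a JSON body to the gateway and return the decoded JSON response."""
    request = urllib.request.Request(
        GATEWAY_URL + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Hypothetical function name; it must be defined in your gateway configuration.
    print(json.dumps(build_inference_request("draft_reply", "Where is my order?"), indent=2))
```

With a gateway running, the response from `post_json("/inference", ...)` carries an `inference_id`, which you would pass to `build_feedback_request` (for example with a hypothetical boolean metric `reply_accepted`) and send via `post_json("/feedback", ...)`. Keeping the payload builders separate from the network call makes them testable offline.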

As data accumulates, teams run TensorZero’s optimization recipes, which use the collected inferences and feedback to produce improved variants such as fine-tuned models or optimized prompts. The platform supports both automated optimization and manual experimentation through A/B testing, so teams can validate changes on live traffic before full deployment. This creates a continuous improvement cycle in which production experience directly informs model behavior, making applications smarter, faster, and more cost-effective over time.
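A/B testing of this kind depends on assigning traffic to variants in proportion to configured weights, while keeping a given episode on one variant so multi-step workflows stay consistent. The following is a generic sketch of weighted, deterministic assignment, not TensorZero's internal sampling code; `assign_variant` and the variant names are hypothetical.

```python
import hashlib
from typing import Dict


def assign_variant(episode_id: str, weights: Dict[str, float]) -> str:
    """Deterministically assign an episode to a variant in proportion to its weight."""
    if any(w < 0 for w in weights.values()):
        raise ValueError("weights must be non-negative")
    positive = [name for name in sorted(weights) if weights[name] > 0]
    if not positive:
        raise ValueError("at least one variant needs a positive weight")
    total = sum(weights[name] for name in positive)

    # Hash the episode ID to a stable pseudo-random number in [0, 1].
    digest = hashlib.sha256(episode_id.encode("utf-8")).digest()
    u = int.from_bytes(digest[:8], "big") / 2**64

    # Walk the cumulative weights (sorted name order) until u * total falls inside one.
    threshold = u * total
    cumulative = 0.0
    for name in positive:
        cumulative += weights[name]
        if threshold < cumulative:
            return name
    return positive[-1]  # only reached if float rounding pushes u to 1.0


if __name__ == "__main__":
    # Hypothetical variants: most episodes keep the current prompt, some try a candidate.
    weights = {"baseline_prompt": 0.9, "candidate_prompt": 0.1}
    counts = {name: 0 for name in weights}
    for i in range(10_000):
        counts[assign_variant(f"episode-{i}", weights)] += 1
    print(counts)  # expect roughly a 90/10 split
```

Hashing the episode ID instead of drawing a fresh random number keeps every inference in an episode on the same variant, so feedback for that episode can be attributed cleanly; a real experiment would also record the assigned variant next to its feedback.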

Use Cases

TensorZero serves organizations across the spectrum of LLM application maturity, from early-stage startups to enterprise-scale deployments:

- Enterprise LLM Application Optimization: Enable large organizations to systematically improve customer service chatbots, internal knowledge management systems, and automated content generation platforms through data-driven optimization and comprehensive monitoring.

- AI-First Startup Infrastructure: Provide Series A to Series B startups with production-ready LLM infrastructure that scales with growth, eliminating the need to rebuild systems as user bases expand and requirements become more complex.

- Research and Development Environments: Support ML teams and research organizations in conducting systematic prompt engineering experiments, model comparisons, and performance evaluations with reproducible results and comprehensive data tracking.

- Financial Services AI Applications: Meet stringent compliance and security requirements with self-hosted infrastructure while optimizing AI-powered risk assessment, fraud detection, and automated customer communications.

- Healthcare and Regulated Industries: Keep data processing local to support HIPAA-compliant LLM applications, with audit trails and systematic performance monitoring for clinical decision support and patient communication systems.

Advantages and Considerations

Strengths

- Production-Grade Performance: According to TensorZero’s published benchmarks, the Rust-based gateway adds sub-millisecond latency overhead at 10,000+ QPS, significantly outperforming Python-based alternatives while maintaining enterprise reliability standards.

- Comprehensive Data Strategy: Unlike fragmented toolchains, TensorZero provides end-to-end data collection and optimization workflows, ensuring all inference data contributes to continuous improvement rather than being lost.

- Enterprise Security and Compliance: Fully self-hosted solution with local data processing, audit trails, and GitOps configuration management, meeting stringent enterprise security and regulatory requirements.

- Provider Flexibility: Avoid vendor lock-in with support for 15+ major LLM providers plus any OpenAI-compatible API, enabling dynamic routing and cost optimization across multiple services.

- Open-Source Transparency: Complete platform transparency with active community development, enabling organizations to understand, modify, and extend capabilities without proprietary service dependencies.
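The data strategy described in these strengths ultimately means curating production inferences into training data. A minimal sketch, assuming each stored inference has already been exported as a plain dict with hypothetical `input_messages`, `output`, and `feedback` fields (this is not TensorZero’s ClickHouse schema): it keeps only inferences with positive boolean feedback and serializes them in the chat-style `{"messages": [...]}` JSONL layout commonly used for supervised fine-tuning.

```python
import json
from typing import Any, Dict, Iterable, List


def curate_sft_examples(records: Iterable[Dict[str, Any]], metric: str) -> List[Dict[str, Any]]:
    """Keep inferences whose boolean feedback for `metric` is True, as chat examples.

    Each record is assumed (hypothetically) to look like:
    {"input_messages": [...], "output": "...", "feedback": {"metric_name": bool}}
    """
    examples = []
    for record in records:
        if record.get("feedback", {}).get(metric) is True:
            # Append the model's accepted answer as the assistant turn to learn from.
            messages = record["input_messages"] + [
                {"role": "assistant", "content": record["output"]}
            ]
            examples.append({"messages": messages})
    return examples


def to_jsonl(examples: List[Dict[str, Any]]) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(example) + "\n" for example in examples)
```

Filtering on explicit positive feedback is the simplest curation rule; stricter pipelines might also deduplicate prompts or hold out a validation split before fine-tuning.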

Limitations and Considerations

- Initial Setup Complexity: While quickstart guides exist, production-grade deployments require infrastructure expertise, Docker orchestration, and database management capabilities.

- Resource Requirements: ClickHouse data warehouse and high-performance gateway require dedicated infrastructure resources, representing ongoing operational costs beyond basic LLM API expenses.

- Learning Curve for Advanced Features: Optimization techniques such as fine-tuning, preference optimization, and automated prompt engineering require ML expertise to apply effectively, though UI tools reduce technical barriers.

- Community and Ecosystem Maturity: As a relatively new platform, TensorZero has a smaller community and fewer third-party integrations than established frameworks, though its community is growing.

How Does It Compare? (Updated for 2025)

The LLMOps infrastructure landscape has evolved significantly, with TensorZero competing in a market increasingly focused on production-grade, data-driven optimization rather than basic prototyping tools.

Modern LLMOps Infrastructure

Weights & Biases (W&B) provides comprehensive ML experiment tracking and model management with strong LLM support, excelling in research environments and model development workflows. However, W&B focuses more on training and experimentation than on production inference optimization, making TensorZero complementary for deployment scenarios.

MLflow offers open-source ML lifecycle management with growing LLM capabilities, providing model versioning and deployment tools. While MLflow handles model management effectively, TensorZero focuses on real-time inference, feedback collection, and optimization built specifically for LLM applications.

Langfuse specializes in LLM observability and analytics with excellent prompt tracking and cost management features. TensorZero differentiates through its comprehensive optimization capabilities and high-performance gateway, while Langfuse focuses primarily on monitoring and analytics without the inference-time optimization features.

Arize provides ML observability and monitoring with strong drift detection capabilities, serving enterprises requiring comprehensive model performance monitoring. TensorZero’s advantage lies in its unified approach combining high-performance inference with optimization, rather than separate monitoring and deployment solutions.

Development Frameworks and Prototyping Tools

Compared to LangChain and Haystack, TensorZero addresses production deployment challenges that emerge after successful prototyping. While these frameworks excel at rapid development and diverse integrations, TensorZero provides the infrastructure needed to scale, monitor, and optimize applications in production environments.

LiteLLM serves as a lighter-weight unified API solution but lacks TensorZero’s optimization and observability features. TensorZero’s own benchmarks report 25-100x lower latency overhead than LiteLLM while sustaining roughly 100x higher throughput, which makes TensorZero better suited to high-scale production deployments.
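Latency-overhead comparisons like this are usually reported as percentiles. A minimal sketch, assuming a benchmark that routes requests through the gateway to a mock provider with a fixed, known response time, so per-request overhead is total latency minus that fixed time; the helper names are hypothetical and this is not TensorZero’s benchmark harness.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], p: float) -> float:
    """Nearest-rank percentile for p in (0, 100]."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank of the percentile sample
    return ordered[rank - 1]


def overhead_summary(gateway_latencies_ms: Sequence[float], mock_provider_ms: float) -> Dict[str, float]:
    """Per-request overhead = end-to-end latency via the gateway minus the mock provider's fixed latency."""
    overhead = [total - mock_provider_ms for total in gateway_latencies_ms]
    return {
        "p50": percentile(overhead, 50),
        "p99": percentile(overhead, 99),
        "max": max(overhead),
    }
```

Reporting p99 alongside p50 matters because tail latency, not the median, is usually what degrades first as request rates climb.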

Cloud-Native AI Platforms

Major cloud providers offer managed AI services, but TensorZero addresses organizations requiring self-hosted solutions for data privacy, customization, or cost optimization. The platform’s open-source nature provides transparency and control that managed services cannot match, particularly important for regulated industries.

Enterprise Positioning

For organizations serious about production LLM applications, TensorZero’s comprehensive approach to infrastructure, optimization, and observability provides significant advantages over fragmented toolchains. The platform’s focus on continuous improvement through production data creates sustainable competitive advantages that accumulate over time, making it particularly valuable for companies building AI-driven products rather than simple integrations.

Final Thoughts

TensorZero represents a well-integrated approach to LLM infrastructure, addressing the critical gap between prototype development and production-scale deployment. The platform’s emphasis on data-driven optimization, combined with enterprise-grade performance and security features, positions it as a compelling solution for organizations seeking to build sustainable competitive advantages through AI technology.

The platform’s recent funding and growing adoption reflect market recognition of the need for comprehensive LLMOps infrastructure beyond basic API gateways. For engineering teams responsible for production LLM applications, TensorZero’s unified approach to inference, observability, and optimization offers significant operational advantages over fragmented toolchains, particularly in environments where performance, security, and continuous improvement are paramount.

While the platform requires more initial setup than simpler alternatives, this complexity reflects TensorZero’s focus on solving real production challenges rather than demo scenarios. Organizations willing to invest in proper infrastructure setup will find TensorZero provides a robust foundation for scaling LLM applications while maintaining the visibility and control necessary for continuous optimization and enterprise compliance requirements.