Overview

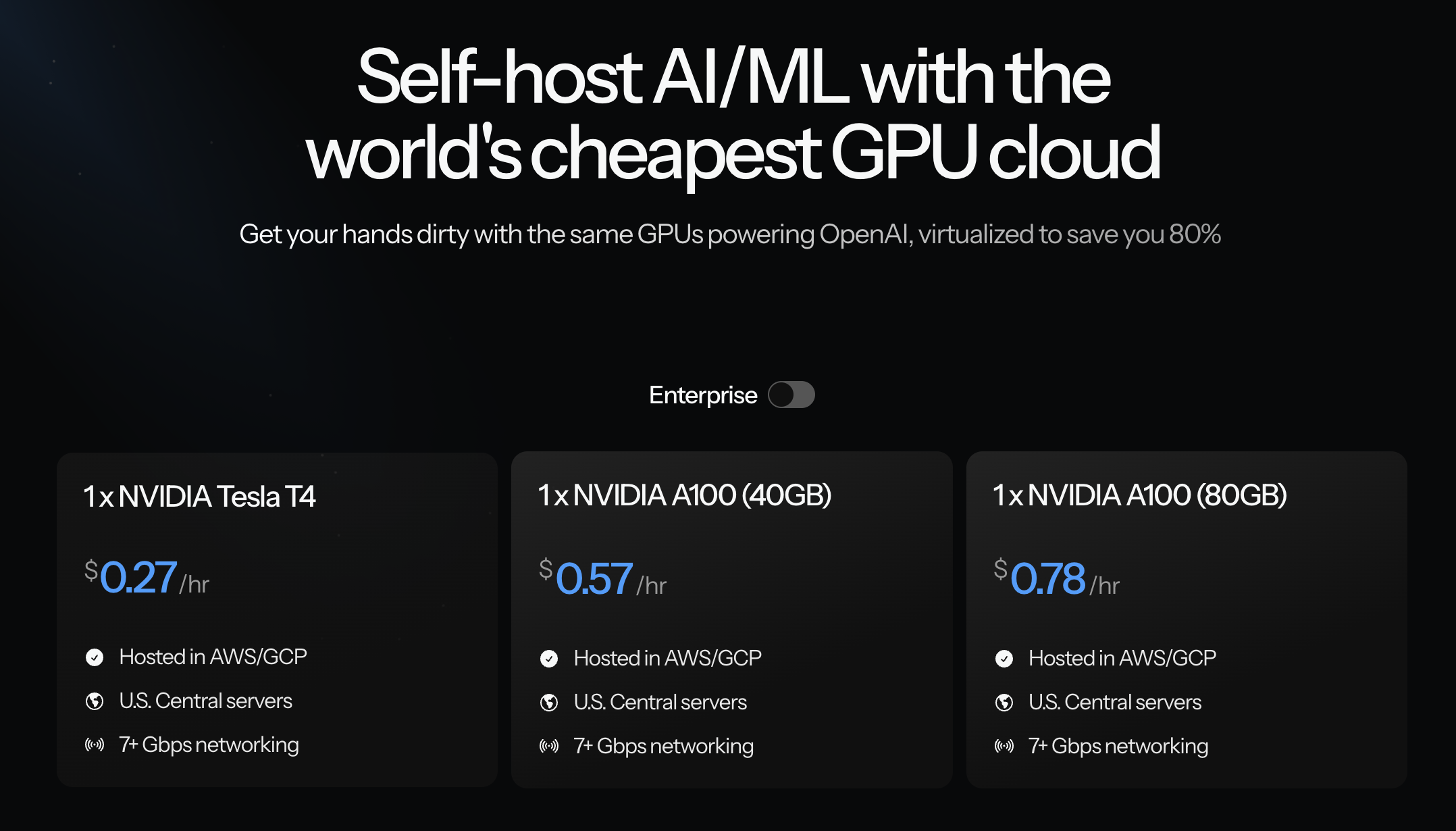

In the rapidly evolving landscape of Artificial Intelligence and Machine Learning, access to powerful GPU resources is paramount. But the high costs associated with traditional cloud providers can be a significant barrier. Enter Thunder Compute, a promising solution that offers high-performance, low-cost GPU cloud infrastructure specifically optimized for AI/ML workloads. Let’s dive into what makes Thunder Compute a compelling option for AI practitioners.

Key Features

Thunder Compute boasts a range of features designed to streamline AI/ML development and deployment:

- Virtualized GPU infrastructure: This allows for efficient resource utilization and cost optimization.

- High-performance AI/ML workloads: Engineered to handle demanding computational tasks with ease.

- Up to 40% cost savings: A significant advantage over traditional cloud providers, making AI development more accessible.

- Quick deployment: Get your GPU instances up and running rapidly, minimizing setup time.

- Scalable clusters: Easily scale your resources to meet the growing demands of your AI projects.

- Access to OpenAI-grade GPUs: Leverage the same powerful GPUs used by leading AI research organizations.
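To put the "up to 40%" figure in perspective, here is a minimal back-of-the-envelope sketch. The baseline hourly rate and the number of hours are hypothetical placeholders, not actual prices from Thunder Compute or any other provider, and 40% is treated as the best case rather than a guaranteed discount.

```python
def discounted_cost(baseline_hourly_rate: float, hours: float, savings_fraction: float) -> float:
    """Return the total cost after applying a fractional saving to a baseline hourly rate."""
    if not 0.0 <= savings_fraction <= 1.0:
        raise ValueError("savings_fraction must be between 0 and 1")
    return baseline_hourly_rate * hours * (1.0 - savings_fraction)


# Hypothetical inputs for illustration only; substitute real quotes before comparing providers.
BASELINE_RATE = 2.00   # assumed USD per GPU-hour at a traditional cloud provider
HOURS = 100            # assumed length of a training run, in GPU-hours
MAX_SAVINGS = 0.40     # the "up to 40%" figure, used here as the best case

baseline_total = discounted_cost(BASELINE_RATE, HOURS, 0.0)
best_case_total = discounted_cost(BASELINE_RATE, HOURS, MAX_SAVINGS)

print(f"Baseline cost:  ${baseline_total:.2f}")
print(f"Best-case cost: ${best_case_total:.2f}")
print(f"Maximum saving: ${baseline_total - best_case_total:.2f}")
```

Because the claim is "up to" 40%, the real saving for a given workload may be smaller, so it is worth rerunning the numbers with actual quotes for the GPU type you need.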

How It Works

Thunder Compute simplifies GPU resource management. Users select their desired GPU configuration through the platform’s intuitive interface. Once selected, Thunder Compute automatically launches virtualized GPU instances. The system handles all the complexities of provisioning, scaling, and performance optimization, significantly reducing manual setup and operational overhead. This allows users to focus on their AI models and algorithms, rather than wrestling with infrastructure.

Use Cases

Thunder Compute’s capabilities make it suitable for a wide array of AI and high-performance computing applications:

- AI model training: Accelerate the training process of complex AI models.

- Machine learning experimentation: Rapidly iterate on different model architectures and hyperparameters.

- Generative AI workloads: Power the creation of images, text, and other generative content.

- High-performance computing (HPC): Tackle computationally intensive tasks in scientific research and engineering.

- Research simulations: Conduct complex simulations in fields like physics, chemistry, and biology.

Pros & Cons

Like any technology, Thunder Compute has its strengths and weaknesses. Let’s break them down:

Advantages

- Cost-effective GPU access: Significantly reduces the financial burden of AI development.

- High performance: Delivers the computational power needed for demanding AI workloads.

- Easy setup: Streamlines the deployment process, saving time and effort.

- Scalable resources: Adapts to the changing needs of your AI projects.

- Uses the latest GPU hardware: Provides access to cutting-edge technology.

Disadvantages

- Limited to cloud-native use cases: Primarily designed for cloud-based deployments, so it may not suit teams that need on-premises or hybrid setups.

- Still in early-stage development: May have fewer features and less community support than more established platforms.

How Does It Compare?

When considering GPU cloud providers, it’s important to understand the competitive landscape. Lambda Labs is a more established player, but often comes with a higher price tag. RunPod offers similar pricing to Thunder Compute, but may not be as optimized for scalability. Vast.ai provides a broader network of GPUs, but lacks the virtualization efficiency that Thunder Compute offers. Thunder Compute distinguishes itself by balancing performance, cost-effectiveness, and ease of use.

Final Thoughts

Thunder Compute presents a compelling option for AI developers and researchers seeking affordable, high-performance GPU resources. While it’s still in its early stages, its focus on virtualization and cost optimization makes it a strong contender in the competitive GPU cloud market. If you’re looking to accelerate your AI projects without breaking the bank, Thunder Compute is worth considering.