
Overview

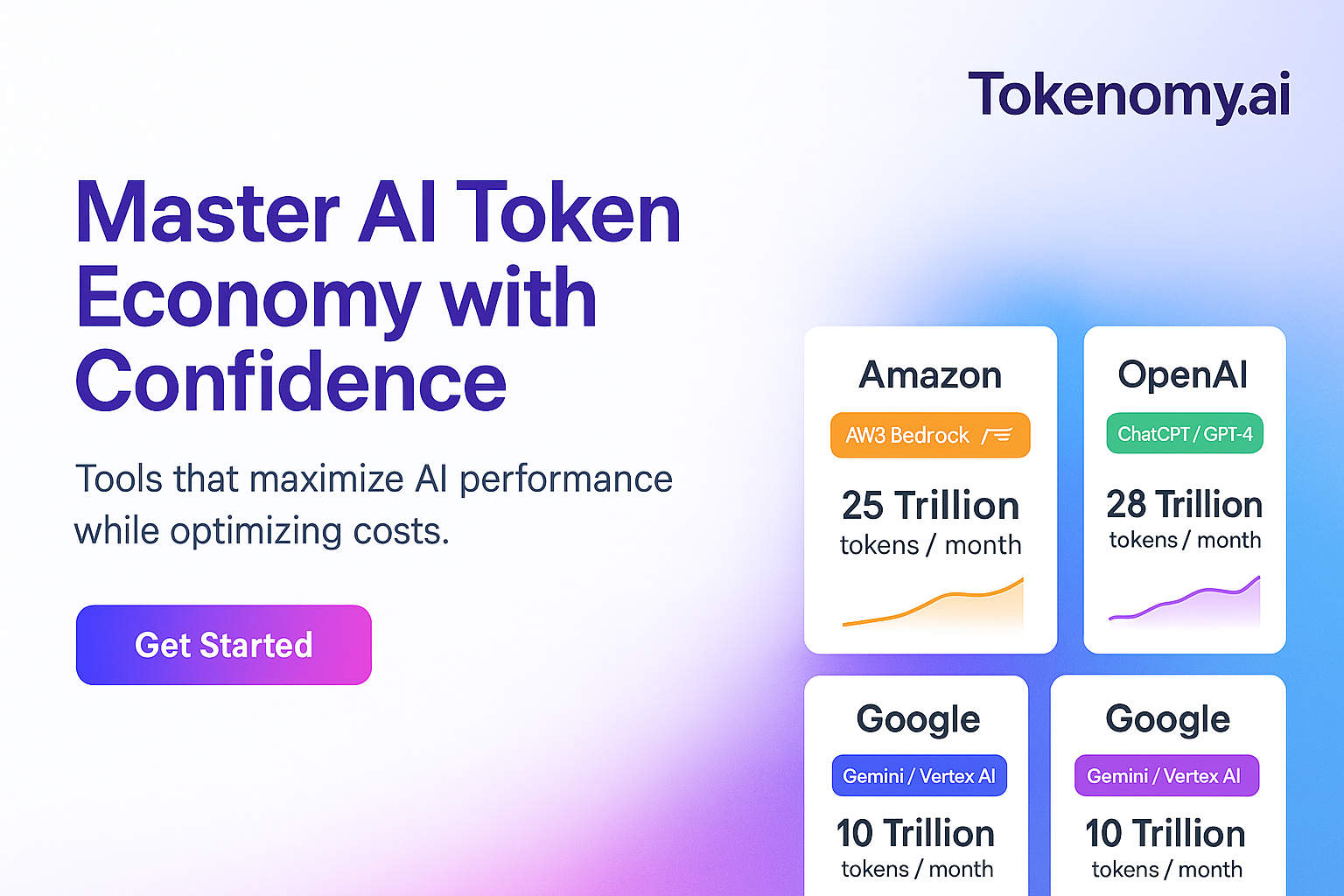

In the rapidly evolving world of AI, managing the costs of large language models (LLMs) is becoming increasingly important. Enter Tokenomy.ai, a tool designed to help developers estimate token usage and costs for LLM APIs such as GPT-4o and Claude before they make a call. The platform integrates into existing workflows and provides predictive estimates and cost-saving tips, helping teams budget for and deploy AI applications efficiently. Let’s dive into what makes Tokenomy.ai a game-changer for AI development teams.

Key Features

Tokenomy.ai boasts a range of features designed to optimize your LLM usage and keep costs under control:

- Predicts token usage and cost before API calls: See what a prompt is likely to cost before you run it, avoiding unexpected expenses.

- Supports GPT-4o, Claude, and other LLMs: Tokenomy.ai is compatible with leading LLMs, ensuring broad applicability for your AI projects.

- VS Code sidebar integration: Get real-time feedback and cost analysis in a VS Code sidebar without leaving your editor.

- CLI and LangChain callback tools: Run estimates from the command line or hook Tokenomy.ai into LangChain through callbacks, so cost checks fit into scripts and agent pipelines.

- Real-time cost-saving suggestions: Receive actionable recommendations to optimize your prompts and reduce token consumption.
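To make the idea of a pre-call estimate concrete, here is a minimal Python sketch of the general technique. It is not Tokenomy.ai’s implementation or API. It assumes the common rule of thumb of roughly four characters per token for English text, and the model names (`model-a`, `model-b`) and per-million-token prices are hypothetical placeholders rather than real list prices.

```python
import math

# Hypothetical models with placeholder prices in USD per 1M input tokens.
# These are NOT real list prices; check each provider's pricing page.
PRICE_PER_MILLION_INPUT = {
    "model-a": 2.00,
    "model-b": 3.00,
}


def estimate_tokens(text: str) -> int:
    """Rough token estimate using the ~4 characters per token rule of thumb."""
    return math.ceil(len(text) / 4)


def estimate_input_cost(text: str, model: str) -> float:
    """Estimated input cost in USD for sending `text` to `model`."""
    tokens = estimate_tokens(text)
    return tokens / 1_000_000 * PRICE_PER_MILLION_INPUT[model]


if __name__ == "__main__":
    prompt = "Summarize the following support ticket in three bullet points."
    # Compare the same prompt across every (hypothetical) supported model.
    for model in PRICE_PER_MILLION_INPUT:
        tokens = estimate_tokens(prompt)
        cost = estimate_input_cost(prompt, model)
        print(f"{model}: ~{tokens} tokens, ~${cost:.6f}")
```

For exact counts on OpenAI models, the `tiktoken` library can replace the character heuristic; other providers use different tokenizers, which is one reason per-model estimates differ.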

How It Works

Tokenomy.ai simplifies the process of estimating token usage and costs. It analyzes prompts through IDE plugins (such as the VS Code sidebar) or CLI tools, estimates token consumption for each supported LLM, and surfaces the likely cost along with suggestions for optimization. For LangChain users, Tokenomy.ai also provides real-time feedback during agent runs to help keep spending within budget.
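The loop described above (estimate, compare against a budget, suggest improvements) can be sketched in Python as follows. Everything here is hypothetical: `BudgetTracker`, `suggest`, the four-characters-per-token heuristic, and the placeholder price are illustrative assumptions, not part of Tokenomy.ai. In a real LangChain integration, a check like `check_prompt` would typically be called from a custom callback handler’s `on_llm_start` method; that wiring is omitted to keep the sketch self-contained.

```python
import math
import re
from dataclasses import dataclass, field


def estimate_tokens(text: str) -> int:
    # ~4 characters per token: a rough heuristic, not a real tokenizer.
    return math.ceil(len(text) / 4)


@dataclass
class BudgetTracker:
    """Hypothetical tracker that accumulates estimated cost across agent steps."""
    budget_usd: float
    price_per_million: float  # placeholder input price, USD per 1M tokens
    spent_usd: float = 0.0
    warnings: list = field(default_factory=list)

    def check_prompt(self, prompt: str) -> float:
        """Estimate a prompt's cost, add it to the running total, and return it.

        Records a warning whenever the cumulative estimate is over budget.
        """
        cost = estimate_tokens(prompt) / 1_000_000 * self.price_per_million
        self.spent_usd += cost
        if self.spent_usd > self.budget_usd:
            self.warnings.append(
                f"Estimated spend ${self.spent_usd:.4f} exceeds budget ${self.budget_usd:.4f}"
            )
        return cost


def suggest(prompt: str) -> list:
    """Return simple, illustrative optimization hints for a prompt."""
    hints = []
    if re.search(r"\s{2,}", prompt):
        hints.append("Collapse repeated whitespace.")
    if estimate_tokens(prompt) > 2000:
        hints.append("Prompt is long; consider trimming context.")
    return hints


if __name__ == "__main__":
    tracker = BudgetTracker(budget_usd=0.001, price_per_million=2.00)
    # Simulate two agent steps; the second one pushes the run over budget.
    for step_prompt in ["Plan the next step.", "Context:  " + "x" * 2400]:
        cost = tracker.check_prompt(step_prompt)
        print(f"~${cost:.6f}", suggest(step_prompt))
    print(tracker.warnings)
```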

Use Cases

Tokenomy.ai is a versatile tool that can benefit a variety of AI development teams:

- AI development teams estimating API usage: Accurately predict API costs during development, enabling better budgeting and resource allocation.

- LLM engineers managing costs in production: Monitor and optimize LLM usage in real time to prevent cost overruns and keep operations efficient.

- Teams using LangChain or VS Code for prompt engineering: Integrate Tokenomy.ai into your existing workflow for seamless cost analysis and optimization during prompt development.
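For teams estimating API usage or managing production costs, the underlying arithmetic is simple: per-call cost is input tokens times the input price plus output tokens times the output price (prices quoted per million tokens), multiplied by call volume. The sketch below assumes a 30-day month; the token counts, call volume, and prices in the example are placeholders, not figures from Tokenomy.ai or any provider.

```python
def project_monthly_cost(
    input_tokens_per_call: int,
    output_tokens_per_call: int,
    calls_per_day: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Project total USD cost over `days` from per-call token counts and prices."""
    per_call = (
        input_tokens_per_call * input_price_per_million
        + output_tokens_per_call * output_price_per_million
    ) / 1_000_000
    return per_call * calls_per_day * days


if __name__ == "__main__":
    # Placeholder workload: 1,000 input + 500 output tokens, 10,000 calls/day.
    monthly = project_monthly_cost(1_000, 500, 10_000, 2.00, 8.00)
    print(f"${monthly:,.2f}/month")  # → $1,800.00/month
```

With these placeholder inputs, each call costs $0.006, so 10,000 calls a day come to about $1,800 over 30 days. Because input and output tokens are priced separately, trimming response length can matter as much as trimming the prompt.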

Pros & Cons

Like any tool, Tokenomy.ai has its strengths and weaknesses. Let’s take a look at the pros and cons:

Advantages

- Real-time cost feedback: Get immediate insights into potential costs and optimization opportunities.

- Integrates with developer tools: Works inside VS Code, CLI tools, and LangChain for a streamlined workflow.

- Prevents cost overruns: Proactively manage your LLM usage and avoid unexpected expenses.

Disadvantages

- Limited to supported models: Supports only a specific set of LLMs, which may not cover every use case.

- Predictive accuracy may vary: While generally accurate, predictions are estimates and can differ from actual usage depending on the complexity of the prompt, the specific LLM, and how long the model’s response turns out to be.
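One reason pre-call predictions can only be estimates is that the prompt is known before the call but the response length is not. A minimal sketch, assuming the same rough four-characters-per-token heuristic and placeholder prices, is to report a range: the lower bound counts only input tokens, and the upper bound adds the maximum number of output tokens the request allows (for example, a max-tokens setting). The function name and parameters are hypothetical.

```python
import math
from typing import Tuple


def estimate_cost_range(
    prompt: str,
    max_output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> Tuple[float, float]:
    """Return (low, high) USD cost bounds for a single call.

    low:  input tokens only (the model returns almost nothing).
    high: input tokens plus the full output-token cap.
    """
    input_tokens = math.ceil(len(prompt) / 4)  # rough heuristic, not a tokenizer
    input_cost = input_tokens * input_price_per_million / 1_000_000
    output_cap_cost = max_output_tokens * output_price_per_million / 1_000_000
    return input_cost, input_cost + output_cap_cost


if __name__ == "__main__":
    low, high = estimate_cost_range("Explain vector databases briefly.", 500, 2.00, 8.00)
    print(f"Estimated cost: ${low:.6f} to ${high:.6f}")
```

The heuristic token count adds its own error on top of this range, since each model family tokenizes text differently.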

How Does It Compare?

While other tools offer some overlapping functionality, Tokenomy.ai stands out for its predictive capabilities. For example, PromptLayer offers logging but provides less predictive insight into token usage and costs. Langfuse tracks usage but lacks the pre-call cost estimation that Tokenomy.ai excels at. This proactive approach sets Tokenomy.ai apart as a valuable tool for cost-conscious AI developers.

Final Thoughts

Tokenomy.ai offers a compelling solution for managing the costs associated with LLM APIs. Its real-time feedback, seamless integration with popular developer tools, and proactive cost estimation make it a valuable asset for AI development teams looking to optimize their workflows and prevent budget overruns. While it has some limitations in terms of model support and predictive accuracy, the benefits of Tokenomy.ai far outweigh the drawbacks for many users.