
Overview

As businesses increasingly deploy AI agents, the challenge shifts from creation to control. Managing thousands of agents, ensuring policy compliance, and keeping costs in check can quickly become an operational nightmare. Enter TrueFoundry’s AI Gateway, a production-ready control plane designed to help you experiment with, monitor, and govern your entire agent ecosystem. Launched on Product Hunt on December 2, 2025, it collected 226 upvotes (one tracker recorded 157), an early signal of market interest. The tool is reportedly already trusted in production for thousands of agents by multiple Fortune 100 companies, providing critical infrastructure for scaling AI responsibly and efficiently across cloud, on-premises, or VPC deployments.

Key Features

TrueFoundry’s AI Gateway packs a suite of powerful features designed to give you complete command over your AI operations. Here are some of the highlights:

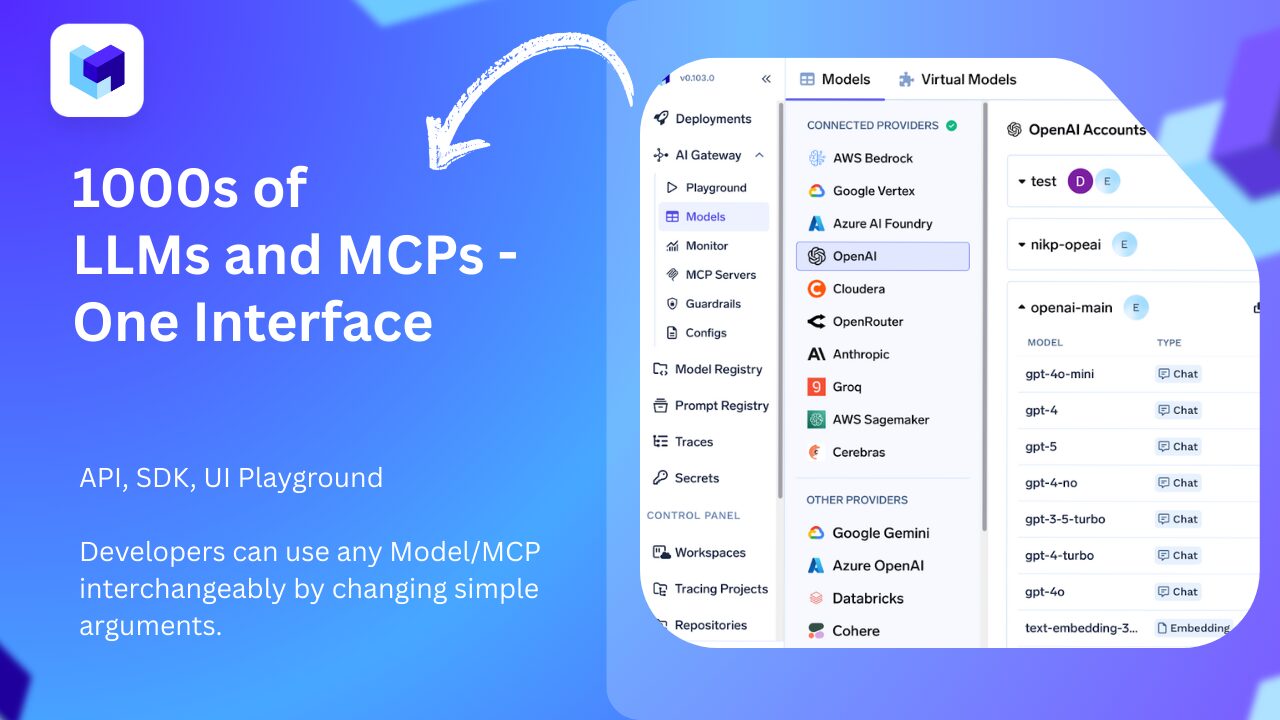

- Centralized Playground for Components: Experiment with and connect all of your agent components, including 250+ LLMs, Model Context Protocol (MCP) servers, Guardrails, Prompts, and Agents, in a single, unified environment before deploying to production. The playground generates production-ready code snippets for OpenAI, LangChain, and other frameworks.

- Full Visibility with Traces & Metrics: Gain complete, real-time visibility into agent responses. The gateway provides detailed traces and health metrics, with granular monitoring of token usage, latency, error rates, and request volumes, making it easy to debug issues and track performance by user, model, or custom metadata.

- Cost & Volume Guardrails: Take control of your spending and usage. Set up granular rules and limits on request volumes, token consumption, and overall cost to prevent unexpected bills and ensure resource stability. The system supports budgets that automatically throttle or downgrade requests when thresholds are exceeded.

- Model & Prompt Management: Centrally manage and version your prompts and models through the gateway’s prompt management tools. This ensures consistency, simplifies updates, and supports systematic A/B testing and optimization across all your agents, with full version history.

- MCP Gateway Integration: Native support for the Model Context Protocol enables seamless integration with tools such as Slack, GitHub, and other enterprise systems, with centralized, security-focused management and audit trails.

- Enterprise Security & Governance: SOC 2, HIPAA, and GDPR compliance, with RBAC, API key management, rate limiting, and configurable guardrails that enforce PII handling rules and alignment with safety policies. Deploy on-premises or in a VPC for complete data sovereignty.

- Low Latency Performance: Sub-3ms internal latency with intelligent routing, automated failovers, load balancing, and 99.99% uptime even during third-party model outages.
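To make the cost and volume guardrails more concrete, here is a minimal Python sketch of how a per-team budget rule might throttle or downgrade requests. It is not TrueFoundry’s configuration format or implementation: `BudgetRule`, `BudgetGuard`, the soft/hard limit semantics, and the model names are all hypothetical, and the sketch assumes the caller can estimate a request’s cost before sending it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BudgetRule:
    # Hypothetical rule shape, not TrueFoundry's actual config schema.
    soft_limit_usd: float   # above this projected spend, downgrade to a cheaper model
    hard_limit_usd: float   # above this projected spend, reject the request
    fallback_model: str     # cheaper model used once the soft limit is crossed


@dataclass
class BudgetGuard:
    rule: BudgetRule
    spent_usd: float = 0.0  # running spend for the current budget window

    def decide(self, requested_model: str, estimated_cost_usd: float) -> tuple[str, str | None]:
        """Return (action, model) where action is "allow", "downgrade", or "reject"."""
        projected = self.spent_usd + estimated_cost_usd
        if projected > self.rule.hard_limit_usd:
            return "reject", None
        if projected > self.rule.soft_limit_usd:
            return "downgrade", self.rule.fallback_model
        return "allow", requested_model

    def record(self, actual_cost_usd: float) -> None:
        # Called after the upstream response arrives, with the real cost.
        self.spent_usd += actual_cost_usd


guard = BudgetGuard(BudgetRule(soft_limit_usd=80.0, hard_limit_usd=100.0, fallback_model="small-model"))
guard.record(75.0)
print(guard.decide("large-model", 2.0))   # ('allow', 'large-model')
print(guard.decide("large-model", 10.0))  # ('downgrade', 'small-model')
print(guard.decide("large-model", 30.0))  # ('reject', None)
```

Checking projected spend rather than current spend means a single expensive request cannot push a team far past its hard limit. A fuller version would also reset `spent_usd` at the start of each budget window and count requests to enforce volume limits.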

How It Works

Understanding how the AI Gateway operates is key to appreciating its value. It functions as a sophisticated middleware layer that sits between your applications and the various AI models and services you use.
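One way to picture this middleware role is a wrapper that runs checks before and after each model call. The Python sketch below is purely illustrative: the regular expressions are deliberately naive stand-ins for real injection and PII detectors, and `with_guardrails`, `GuardrailViolation`, and `echo_model` are hypothetical names, not part of any TrueFoundry API.

```python
from __future__ import annotations

import re
from typing import Callable

# Deliberately naive, hypothetical rules; production guardrails use far more robust detectors.
INJECTION_PATTERNS = [re.compile(r"ignore (all )?previous instructions", re.IGNORECASE)]
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


class GuardrailViolation(Exception):
    """Raised when an input guardrail blocks a request."""


def with_guardrails(call_model: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a model call with an input check and an output redaction step."""

    def guarded(prompt: str) -> str:
        # Input guardrail: block prompts that match a known injection phrase.
        for pattern in INJECTION_PATTERNS:
            if pattern.search(prompt):
                raise GuardrailViolation("possible prompt injection")
        response = call_model(prompt)
        # Output guardrail: redact email addresses before the response leaves the gateway.
        return EMAIL_PATTERN.sub("[REDACTED EMAIL]", response)

    return guarded


def echo_model(prompt: str) -> str:
    # Stand-in for an upstream LLM call.
    return f"Contact jane.doe@example.com about: {prompt}"


guarded_model = with_guardrails(echo_model)
print(guarded_model("the renewal"))  # Contact [REDACTED EMAIL] about: the renewal
```

Keeping both checks in one wrapper mirrors the idea of a single enforcement point: as long as applications hold no direct provider keys, every request passes through the same policies.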

The process is straightforward: developers use the integrated playground to connect their chosen models from 250+ options (OpenAI, Anthropic, Gemini, Groq, Mistral, AWS Bedrock, Google Vertex AI, Azure OpenAI, and self-hosted models), craft specific prompts, and configure safety guardrails. Once this agent flow is designed, it is exposed through the gateway as a unified, OpenAI-compatible endpoint. From that point on, the gateway intercepts every request, applying your predefined rules to manage traffic, enforce cost limits, perform latency-based routing, and check that responses are safe and compliant before they reach the end user. Applications authenticate with a single, centrally managed gateway API key governed by role-based access control, so teams no longer need to manage separate keys for each provider.
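Because the gateway exposes an OpenAI-compatible endpoint, a client needs only one base URL and one key. The following standard-library Python sketch builds, but does not send, such a request. The base URL, the key, and the `provider-account/model` naming of the `model` field are placeholders assumed for illustration; check your own gateway settings for the real values.

```python
import json
import urllib.request

# Placeholder values: substitute your gateway's real base URL, API key, and model ID.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"
GATEWAY_API_KEY = "example-gateway-key"


def build_chat_request(model: str, user_message: str,
                       base_url: str = GATEWAY_BASE_URL,
                       api_key: str = GATEWAY_API_KEY) -> urllib.request.Request:
    """Build, but do not send, an OpenAI-style chat completions request to the gateway."""
    body = {
        "model": model,  # assumed "provider-account/model" naming
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # the single gateway key, not a provider key
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request("my-openai-account/gpt-4o", "Summarize our Q3 incident report.")
print(req.full_url)      # https://gateway.example.com/v1/chat/completions
print(req.get_method())  # POST
# Sending it would be: urllib.request.urlopen(req), then json.load() on the response.
```

Since the request follows the OpenAI chat completions format, the official `openai` Python SDK should also work by passing the gateway URL as `base_url`; treat that as an assumption to verify against TrueFoundry’s documentation.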

Use Cases

With a clear understanding of its mechanics, let’s explore where TrueFoundry’s AI Gateway truly shines. It is an ideal solution for a variety of enterprise-level challenges:

- Managing Thousands of Enterprise Agents: When your AI deployment scales, the gateway provides the centralized control needed to manage a large and diverse fleet of agents without chaos, with full request/response logging and customizable retention.

- Enforcing Corporate AI Policies: Ensure all agent interactions adhere to strict corporate guidelines, data privacy rules, and brand safety standards by implementing universal guardrails that scan inputs for injection attacks and outputs for policy violations.

- Debugging Complex Agent Flows: The deep observability and tracing capabilities allow developers to quickly pinpoint and resolve issues within intricate, multi-step agent interactions, showing tool calls, intermediate steps, and guardrail interventions.

- Cost Control for LLM Usage: For organizations leveraging expensive large language models, the gateway is essential for monitoring and capping API costs through token-based quotas and cost budgets, ensuring projects stay on budget with comprehensive usage tracking.

- Geo-Fenced AI Deployments: Meet regional data laws through geo-aware routing rules and data residency enforcement, ensuring data never leaves specific regions or countries as required by GDPR, HIPAA, and other regulatory frameworks.

- Multi-Model RAG Systems: Optimize cost and latency through weighted routing across different models, with intelligent caching strategies that reduce redundant API calls.
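The geo-fencing and weighted-routing ideas above can be combined in a small sketch: filter deployments by permitted region first, then choose among the remaining ones by weight, with an exact-match cache in front. Everything here is hypothetical (the `Deployment` fields, region names, weights, and the in-memory cache); this review does not show TrueFoundry’s actual routing configuration.

```python
from __future__ import annotations

import hashlib
import random
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Deployment:
    # Hypothetical deployment entry; names, regions, and weights are illustrative.
    name: str
    region: str
    weight: float


class GeoWeightedRouter:
    def __init__(self, deployments: list[Deployment], allowed_regions: set[str], seed: int | None = None):
        # Geo-fence: discard every deployment outside the permitted regions up front.
        self.candidates = [d for d in deployments if d.region in allowed_regions]
        if not self.candidates:
            raise ValueError("no deployment satisfies the data-residency rule")
        self.rng = random.Random(seed)
        self.cache: dict[str, str] = {}

    def pick(self) -> Deployment:
        # Weighted random choice among the region-compliant deployments only.
        weights = [d.weight for d in self.candidates]
        return self.rng.choices(self.candidates, weights=weights, k=1)[0]

    def complete(self, prompt: str, call_model: Callable[[Deployment, str], str]) -> str:
        # Exact-match cache keyed on a SHA-256 hash of the prompt.
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self.cache:
            return self.cache[key]
        answer = call_model(self.pick(), prompt)
        self.cache[key] = answer
        return answer


deployments = [
    Deployment("eu-large", "eu-west", 0.2),
    Deployment("eu-small", "eu-west", 0.8),
    Deployment("us-large", "us-east", 1.0),
]
router = GeoWeightedRouter(deployments, allowed_regions={"eu-west"}, seed=7)

calls: list[str] = []


def fake_model(deployment: Deployment, prompt: str) -> str:
    # Stand-in for an upstream LLM call that records which deployment served it.
    calls.append(deployment.name)
    return f"{deployment.name}: answer to {prompt!r}"


first = router.complete("What is our refund policy?", fake_model)
second = router.complete("What is our refund policy?", fake_model)
print(first == second, len(calls))  # True 1
```

Filtering before weighting means a routing weight can never override a residency rule. An exact-match cache only helps when prompts repeat verbatim; semantic caching is a different technique not shown here.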

Pros & Cons

While the applications are compelling, it’s important to look at both sides of the coin to determine if this tool is the right fit for your needs.

Advantages

- Production-Ready Governance: Built from the ground up for enterprise scale, offering robust tools for security, compliance, and operational control, backed by SOC 2, HIPAA, and GDPR compliance.

- Model Agnostic: The gateway is not tied to any specific model provider, giving you the flexibility to use the best LLMs for the job from any source, including 250+ models from major cloud platforms and specialized providers.

- Deep Observability: Provides granular insights into every aspect of your agent’s performance, from latency and cost to the content of responses, with full request/response logging and export capabilities.

- Sub-3ms Latency: Maintains extremely low internal latency with intelligent routing and load balancing, ensuring real-time application performance.

- Flexible Deployment: Supports on-premises, VPC, and cloud deployments with no data egress requirements, addressing data sovereignty concerns for regulated industries.

Disadvantages

- Adds Infrastructure Complexity: As a middleware solution, it introduces an additional layer to your tech stack that must be managed and maintained, including Helm-based management for self-hosted setups.

- Primarily B2B/Enterprise Focused: The feature set and pricing are tailored for large organizations, which may make it less accessible for individual developers or small startups. Pricing information is not publicly disclosed and requires direct engagement with the vendor.

- Learning Curve: While the playground simplifies experimentation, fully leveraging enterprise features like RBAC, custom guardrails, and multi-region deployments requires technical expertise and organizational change management.

- Vendor-Specific Ecosystem: While model-agnostic, the platform’s unique features create some degree of dependency on TrueFoundry’s infrastructure and update cycles.

How Does It Compare?

TrueFoundry AI Gateway vs. Portkey

Portkey is a comprehensive LLM orchestration platform offering unified API access to 1600+ LLMs with prompt management, conditional routing, and observability features.

Scope:

- TrueFoundry: Positions itself as an “enterprise control plane” emphasizing agent-scale governance, MCP Gateway integration, and production reliability for F100 companies managing thousands of agents

- Portkey: Markets itself as “E2E LLM Orchestration” for a broader developer audience, featuring a Prompt Engineering Studio and “MCP Client” functionality

Core Strengths:

- TrueFoundry: Sub-3ms latency, 99.99% uptime SLA, air-gapped/on-prem deployments, reported scalability (10B+ monthly requests), and a claimed 30% average cost reduction through smart routing

- Portkey: Dedicated Prompt Engineering Studio with build/test/deploy workflows, extensive conditional routing with fallbacks/retries/load balancing, strong LLM observability with real-time dashboards

Deployment:

- TrueFoundry: Cloud, on-premises, VPC, air-gapped environments with Helm-based management

- Portkey: Cloud-managed, Docker, Kubernetes, self-hosted options with hybrid deployment flexibility

Pricing:

- TrueFoundry: Not publicly disclosed; requires enterprise sales contact

- Portkey: Developers (Free), Production ($49/mo), Enterprise (Custom)

When to Choose TrueFoundry: For maximum production reliability, sub-3ms latency requirements, on-premises/air-gapped deployments, proven F100-scale infrastructure, and when MCP Gateway security is critical.

When to Choose Portkey: For dedicated prompt engineering workflows, more accessible pricing for mid-market teams, faster onboarding with clear tier structure, and when prompt versioning/testing is a primary workflow.

TrueFoundry AI Gateway vs. Helicone

Helicone is an open-source LLM observability platform (YC W23) that provides monitoring, evaluation, and experimentation capabilities with a one-line integration.

Core Focus:

- TrueFoundry: Full-stack AI Gateway with governance, routing, caching, quotas, and control plane capabilities beyond observability

- Helicone: Specialized in observability, analytics, and evaluation with lightweight integration (proxy logging via Cloudflare Workers)

Architecture:

- TrueFoundry: Enterprise middleware with sub-3ms latency, Rust-based gateway, comprehensive request interception and policy enforcement

- Helicone: Web platform (Next.js), Cloudflare Workers for proxy logging, ClickHouse for analytics, and MinIO for storage, optimized for observability at scale

Feature Comparison:

- TrueFoundry: Unified access (250+ models), guardrails, rate limiting, MCP Gateway, prompt management playground, cost/volume controls, RBAC

- Helicone: Full request/response logs, token tracking, latency analysis, playground for testing, prompt management, evaluations (LastMile, Ragas), fine-tuning partner integration

Security & Compliance:

- TrueFoundry: SOC 2, HIPAA, and GDPR compliance; enterprise-grade RBAC, on-prem deployment, data residency enforcement

- Helicone: SOC 2 and GDPR compliant; focuses on observability security rather than comprehensive governance

Pricing:

- TrueFoundry: Enterprise pricing (not disclosed)

- Helicone: Generous free tier (10k requests/month), no credit card required; paid tiers for higher volumes

When to Choose TrueFoundry: For a comprehensive AI gateway with governance, quota management, multi-region routing, and enterprise security, and when you need a control plane that goes beyond observability.

When to Choose Helicone: For lightweight observability with one-line integration, generous free tier, strong evaluation/fine-tuning workflows, and when you don’t need comprehensive gateway governance.

TrueFoundry AI Gateway vs. LangSmith

LangSmith is LangChain’s observability and evaluation platform designed for monitoring, debugging, and optimizing LLM applications with end-to-end tracing.

Core Focus:

- TrueFoundry: Enterprise AI Gateway providing unified model access, routing, governance, and control plane capabilities

- LangSmith: LLM observability and evaluation platform specializing in trace analysis, prompt evaluation, and application debugging

Architecture:

- TrueFoundry: Middleware gateway intercepting all requests with policy enforcement, intelligent routing, and centralized API management

- LangSmith: Monitoring platform integrated with LangChain (but works independently) providing trace visualization, evaluator frameworks, and dataset management

Feature Comparison:

- TrueFoundry: 250+ model access, rate limiting, cost controls, guardrails, MCP Gateway, playground with code generation, RBAC

- LangSmith: End-to-end tracing, waterfall visualization, structured evaluators (Pydantic-based), dataset creation, prompt versioning, individual run inspection

Use Case Differentiation:

- TrueFoundry: Production infrastructure for managing model access, enforcing policies, controlling costs, routing traffic, and maintaining uptime SLAs

- LangSmith: Development/production monitoring for understanding chain execution, evaluating prompt quality, debugging errors, and optimizing workflows

Integration:

- TrueFoundry: Works with any LLM provider; integrates with Langfuse and OpenTelemetry for observability

- LangSmith: Native LangChain integration but framework-agnostic; focuses on trace-level insights rather than infrastructure management

When to Choose TrueFoundry: For enterprise infrastructure managing hundreds of agents, enforcing security policies, controlling API costs, providing unified model access, and requiring production SLAs.

When to Choose LangSmith: For deep application-level debugging, prompt evaluation against reference answers, understanding execution flows, optimizing chain performance, and when using LangChain frameworks.

TrueFoundry AI Gateway vs. LiteLLM

LiteLLM is a robust open-source proxy gateway supporting 100+ LLMs with strong authentication, rate limiting, and logging capabilities.

Performance:

- TrueFoundry: Sub-3ms internal latency, 99.99% uptime, optimized for production scale (10B+ requests/month)

- LiteLLM: Reported in some third-party benchmarks to add more than 50ms of latency per request; resource-intensive at high volumes

Deployment:

- TrueFoundry: Cloud-managed, on-premises, VPC, air-gapped with enterprise support and SLA-backed reliability

- LiteLLM: Self-hosted only (Docker, Kubernetes); enterprise support available through custom pricing

Feature Comparison:

- TrueFoundry: Native MCP Gateway, playground with code generation, geo-aware routing, sub-3ms latency, comprehensive RBAC

- LiteLLM: Strong customization, active community, 15+ native observability integrations (Helicone, Langfuse), good for custom infrastructure

Learning Curve:

- TrueFoundry: <5 minutes setup with playground UI and pre-configured templates

- LiteLLM: 15-30 minutes for initial setup; highly technical, with a steep learning curve that requires infrastructure expertise

Pricing:

- TrueFoundry: Enterprise pricing (contact sales)

- LiteLLM: Free (self-hosted), Enterprise (custom)

When to Choose TrueFoundry: For production-ready infrastructure with guaranteed latency/uptime, enterprise security requirements, managed services, playground-driven development, and MCP Gateway needs.

When to Choose LiteLLM: For engineering teams building highly custom LLM infrastructure, strong community support needs, extensive observability integrations, and when cost constraints favor self-hosting over managed services.

Key Market Positioning

TrueFoundry positions itself uniquely in the AI Gateway market by emphasizing enterprise-grade control plane capabilities rather than pure observability or simple routing. Compared to point solutions:

- vs. Observability Tools (Helicone, LangSmith): TrueFoundry adds comprehensive governance, rate limiting, guardrails, and unified model access that observability platforms lack

- vs. LLM Routers (OpenRouter, Unify AI): TrueFoundry provides enterprise security, RBAC, on-prem deployment, and production SLAs beyond basic routing

- vs. Open-Source Gateways (LiteLLM): TrueFoundry offers managed services, sub-3ms latency, playground UI, and proven F100-scale reliability

The platform’s reported deployment across multiple Fortune 100 companies managing thousands of agents, combined with its compliance posture (SOC 2, HIPAA, GDPR) and deployment flexibility (cloud/on-prem/VPC/air-gapped), establishes it as a production-focused solution for organizations scaling AI responsibly.

Final Thoughts

TrueFoundry’s AI Gateway is more than an observability tool; it is a comprehensive command center for your enterprise AI strategy. For organizations struggling to manage the complexity, cost, and compliance of a growing number of AI agents, it provides an essential layer of control and visibility. The platform’s Product Hunt launch (226 upvotes) and positive community reception signal early market interest, while deployment across multiple Fortune 100 companies points to production readiness.

The gateway’s sub-3ms latency, 99.99% uptime guarantee, and reported scalability (10B+ monthly requests) address critical infrastructure concerns for enterprise AI deployments. Unified access to 250+ models through a single API endpoint with centralized authentication reduces operational complexity, and the cost optimization features come with a claimed average 30% cost reduction through intelligent routing and caching.

However, prospective users should consider trade-offs: lack of publicly disclosed pricing requires enterprise sales engagement, the additional infrastructure layer introduces complexity, and the learning curve for advanced features may require organizational change management. The platform’s enterprise focus makes it potentially overkill for smaller projects or individual developers.

For large-scale, production-grade AI deployments requiring governance, compliance, and operational efficiency, TrueFoundry’s AI Gateway offers substantial value. Organizations deploying hundreds or thousands of agents, operating in regulated industries that require data sovereignty, or struggling with multi-model management will find that the platform addresses critical pain points. Its modular, API-driven architecture integrates with existing tools (LangChain, vector databases, Guardrails), so it is designed to complement rather than replace existing workflows.

If you’re serious about scaling your AI initiatives responsibly with enterprise-grade security, compliance, and performance, TrueFoundry’s AI Gateway deserves a very close look. However, smaller teams or projects should evaluate whether simpler alternatives like Helicone (generous free tier) or LiteLLM (open-source self-hosting) better match their scale and budget constraints.