
Voxtral Transcribe 2 by Mistral

Voxtral Transcribe 2 delivers fast, accurate speech-to-text with real-time transcription and speaker diarization. Mistral’s second-generation audio family includes Voxtral Mini Transcribe V2 for precise batch processing and Voxtral Realtime for live streaming applications, with latency configurable down to under 200ms.

Key Features

- Speaker Diarization: Accurately separates and labels distinct speakers in a recording (ideal for meetings and interviews).

- Word-Level Timestamps: Provides start and end times for every transcribed word, enabling precise subtitle alignment.

- Ultra-Low Latency Streaming: The Realtime model features a native streaming architecture capable of sub-200ms latency, making it viable for conversational voice agents.

- Context Biasing: Allows users to inject up to ~100 domain-specific terms (like product names or acronyms) to boost accuracy on rare vocabulary.

- 13 Supported Languages: Optimized for multilingual transcription including English, French, German, Spanish, Chinese, Japanese, and more.

- Privacy-First Deployment: Available via API; in addition, the Realtime model weights are released as open weights (Apache 2.0) for on-premise or edge deployment.
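To make diarization and word-level timestamps concrete, here is a minimal sketch that merges per-word output into speaker turns, the form most meeting transcripts use. It assumes a hypothetical response shape (a list of dicts with `word`, `start` and `end` in seconds, and `speaker`); this is not Mistral’s documented response schema, so check the API reference for the real field names.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Turn:
    speaker: str
    start: float  # seconds
    end: float    # seconds
    text: str


def words_to_turns(words: Iterable[dict]) -> list[Turn]:
    """Merge consecutive words from the same speaker into turns.

    Assumes each word is a dict with hypothetical keys
    "word", "start", "end" (seconds) and "speaker".
    """
    turns: list[Turn] = []
    for w in words:
        if turns and turns[-1].speaker == w["speaker"]:
            # Same speaker as the previous word: extend the current turn.
            last = turns[-1]
            last.end = w["end"]
            last.text += " " + w["word"]
        else:
            # First word, or the speaker changed: start a new turn.
            turns.append(Turn(w["speaker"], w["start"], w["end"], w["word"]))
    return turns


words = [
    {"word": "Hi", "start": 0.00, "end": 0.30, "speaker": "A"},
    {"word": "there.", "start": 0.30, "end": 0.70, "speaker": "A"},
    {"word": "Hello!", "start": 1.10, "end": 1.60, "speaker": "B"},
]
for t in words_to_turns(words):
    print(f"[{t.start:.2f}-{t.end:.2f}] {t.speaker}: {t.text}")
```

With the sample input this prints one line per speaker turn, e.g. `[0.00-0.70] A: Hi there.` followed by `[1.10-1.60] B: Hello!`.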

How It Works

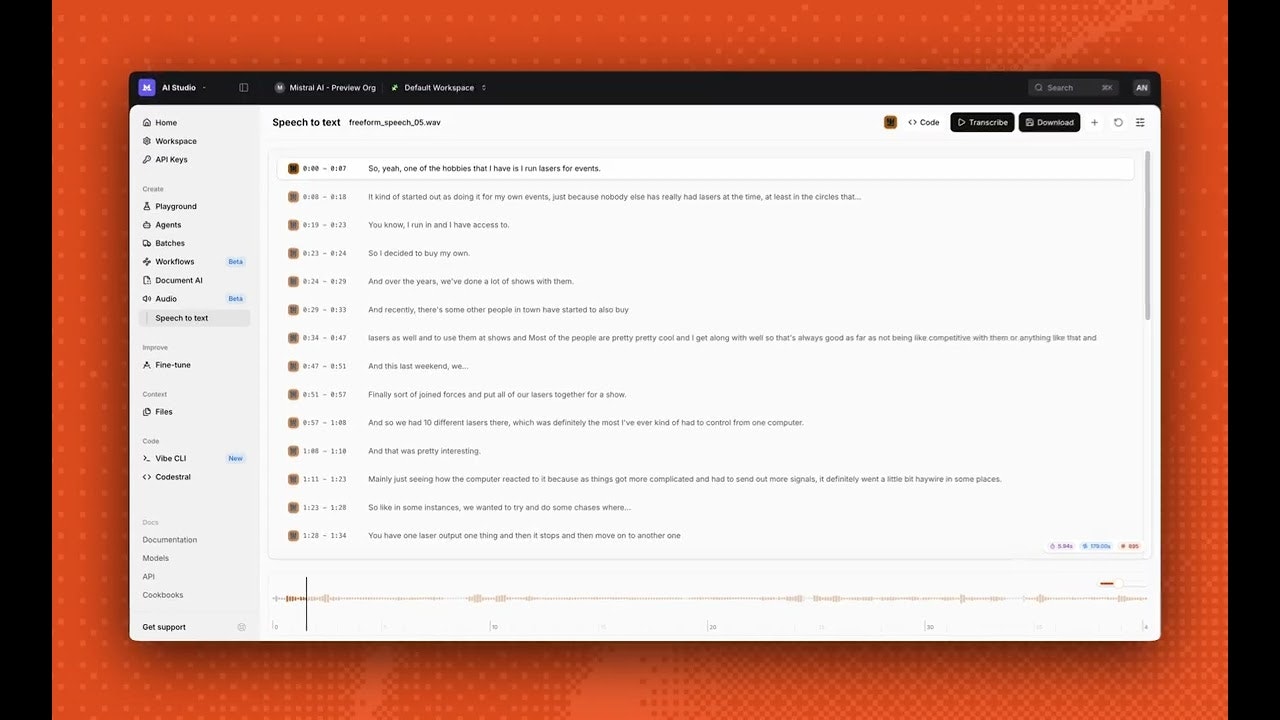

Developers can access Voxtral via Mistral’s API platform (La Plateforme) or test it directly in the Mistral Studio playground. For batch workflows (e.g., uploading a 1-hour meeting recording), the Mini Transcribe V2 model processes the file and returns a transcript with speaker labels and timestamps. For live applications (e.g., a customer service voice bot), developers connect to the Voxtral Realtime WebSocket endpoint, or self-host the open-weights model, to stream audio chunks and receive transcribed text back with minimal delay.
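On the streaming path, the client slices captured audio into small, fixed-duration chunks and sends each one as soon as it is available. The sketch below shows only that chunking step, assuming raw 16 kHz, 16-bit, mono PCM and 80 ms chunks; the audio format and chunk size are illustrative assumptions, not documented Voxtral Realtime requirements, and the WebSocket send is left out because its message format depends on the API.

```python
from typing import Iterator


def chunk_pcm(pcm: bytes, sample_rate: int = 16_000, sample_width: int = 2,
              channels: int = 1, chunk_ms: int = 80) -> Iterator[bytes]:
    """Yield fixed-duration chunks of raw PCM audio.

    The defaults (16 kHz, 16-bit, mono, 80 ms) are illustrative assumptions.
    """
    frame_bytes = sample_width * channels
    # Bytes per chunk: whole sample frames covering chunk_ms of audio.
    chunk_bytes = sample_rate * chunk_ms // 1000 * frame_bytes
    for offset in range(0, len(pcm), chunk_bytes):
        # The final chunk may be shorter than chunk_bytes.
        yield pcm[offset:offset + chunk_bytes]


one_second = bytes(16_000 * 2)  # 1 s of silence at 16 kHz, 16-bit mono
chunks = list(chunk_pcm(one_second))
print(len(chunks), len(chunks[0]))  # 13 2560
```

Smaller chunks let the server start decoding sooner but add per-message overhead, so chunk duration is a tuning knob for the latency target.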

Use Cases

- Conversational Voice Agents: Powering AI agents that need to interrupt and respond immediately, without the multi-second pauses common with traditional batch-oriented APIs.

- Live Meeting Intelligence: Generating real-time transcripts and action items for Zoom/Teams meetings with accurate speaker attribution.

- Media Subtitling: Automating caption creation for videos, with tight synchronization driven by word-level timestamps.

- Contact Center Analytics: Analyzing support calls for sentiment and compliance while keeping sensitive audio data on-premise using the open-weight model.
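For the subtitling use case, word-level timestamps can be grouped directly into SRT cues. This minimal sketch assumes hypothetical word dicts (`word`, `start`, `end` in seconds) and an arbitrary cap of 7 words per cue; production caption tooling usually also limits characters per line and cue duration.

```python
def fmt_ts(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    ms = round(seconds * 1000)
    h, ms = divmod(ms, 3_600_000)
    m, ms = divmod(ms, 60_000)
    s, ms = divmod(ms, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"


def words_to_srt(words: list[dict], max_words: int = 7) -> str:
    """Group timestamped words into numbered SRT cues of up to max_words each."""
    cues = []
    for i in range(0, len(words), max_words):
        group = words[i:i + max_words]
        # A cue spans from its first word's start to its last word's end.
        start, end = group[0]["start"], group[-1]["end"]
        text = " ".join(w["word"] for w in group)
        cues.append(f"{len(cues) + 1}\n{fmt_ts(start)} --> {fmt_ts(end)}\n{text}\n")
    # SRT cues are separated by a blank line.
    return "\n".join(cues)


words = [
    {"word": "Welcome", "start": 0.0, "end": 0.42},
    {"word": "back.", "start": 0.42, "end": 0.90},
]
print(words_to_srt(words))
```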

Pros and Cons

- Pros: Sub-200ms latency is best-in-class for voice bots; open weights (Apache 2.0) for the Realtime model are a huge win for privacy and cost control; diarization is now native (it was missing in V1); pricing is extremely competitive ($0.003/min for batch); and backing from a major European AI lab may make GDPR alignment easier to demonstrate.

- Cons: Limited language support (13 languages) compared to Whisper (99) or Deepgram (30+); self-hosting the open model requires significant GPU engineering expertise; accuracy on extremely noisy audio may still trail larger, slower models.

Pricing

- Voxtral Mini Transcribe V2 (Batch): $0.003 / minute (approx. $0.18/hour).

- Voxtral Realtime (Streaming): $0.006 / minute (approx. $0.36/hour).

- Open Weights: Free to download and run on your own infrastructure (Apache 2.0).
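A quick way to sanity-check the list prices above is to convert the per-minute rates into hourly and monthly costs. The sketch uses `Decimal` to avoid floating-point rounding; the rates are the ones quoted above, and the 10,000-minute monthly volume is just an example. Self-hosting the open weights is not modeled here, since its cost is your own GPU infrastructure rather than a per-minute fee.

```python
from decimal import Decimal

CENT = Decimal("0.01")

# Per-minute list prices quoted above (USD).
RATES_PER_MIN = {
    "Voxtral Mini Transcribe V2 (batch)": Decimal("0.003"),
    "Voxtral Realtime (streaming)": Decimal("0.006"),
}


def cost(minutes: int, rate_per_min: Decimal) -> Decimal:
    """Total cost in USD for a given number of audio minutes."""
    return minutes * rate_per_min


for name, rate in RATES_PER_MIN.items():
    hourly = cost(60, rate).quantize(CENT)
    monthly = cost(10_000, rate).quantize(CENT)
    print(f"{name}: ${hourly}/hour, ${monthly} per 10,000 min")
```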

How Does It Compare?

Voxtral Transcribe 2 aggressively targets the “Real-Time” and “Open” niches in the speech market.

- OpenAI Whisper: The standard for accuracy, but too slow for real-time use. Like Voxtral Realtime, Whisper ships open weights (MIT license), but it lacks a native streaming mode (streaming requires chunking workarounds) and has high latency. Voxtral Realtime is built for streaming from day one. However, Whisper supports far more long-tail languages.

- Deepgram Nova-3: The reigning speed champion and Voxtral’s closest rival, also offering sub-300ms latency and high accuracy. Voxtral competes by offering an open-weights option (Deepgram does not release its model weights) and slightly lower list pricing for batch processing.

- AssemblyAI Universal-1: Excellent for “Intelligence” features (summarization, LeMUR), but typically slower and more expensive for pure transcription. Voxtral is a better “raw engine” choice if you just want text fast and cheap.

- Google / AWS: Legacy cloud providers often charge around $0.024/min (8x Voxtral’s batch rate) and can exceed 1000ms latency. For modern voice agent use cases, Voxtral makes them look outdated.
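The “8x” figure follows directly from the per-minute prices. The sketch below also computes the implied percentage saving, using the $0.024/min legacy figure quoted above as the baseline; actual Google and AWS rates vary by tier and model, so treat the baseline as an assumption.

```python
from decimal import Decimal

LEGACY = Decimal("0.024")    # USD/min, legacy cloud figure quoted above (assumed baseline)
BATCH = Decimal("0.003")     # Voxtral Mini Transcribe V2
REALTIME = Decimal("0.006")  # Voxtral Realtime


def compare(rate: Decimal, baseline: Decimal = LEGACY) -> tuple[Decimal, Decimal]:
    """Return (baseline / rate, percent saved versus the baseline)."""
    multiple = baseline / rate
    saving_pct = (1 - rate / baseline) * 100
    return multiple, saving_pct


for name, rate in (("batch", BATCH), ("realtime", REALTIME)):
    multiple, saving = compare(rate)
    print(f"{name}: baseline costs {multiple}x as much; "
          f"{saving.quantize(Decimal('0.1'))}% saving")
```

Against this baseline, batch transcription comes out 87.5% cheaper and streaming 75% cheaper.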

Final Thoughts

Mistral’s Voxtral Transcribe 2 is a strategic move to own the voice agent infrastructure stack. By open-sourcing the Realtime model, Mistral gives developers something Deepgram and OpenAI’s newer hosted transcription models do not offer: the keys to the engine. For any company building a privacy-sensitive voice bot (e.g., healthcare, banking) or simply wanting to cut its transcription bill by 80%, this is now a compelling open-weight alternative to Whisper.