Overview

Wan 2.6 is an open-source multimodal AI video generation model developed by Alibaba that turns text and visual inputs into cinematic-quality video. Compared with previous versions, it gives creators finer control over motion, lighting, lenses, and aesthetic properties when producing coherent, professional-grade visual narratives. Built on a diffusion transformer architecture, Wan 2.6 supports multi-shot storytelling with intelligent scene scheduling, consistent character casting from reference videos, and native audio-visual synchronization with realistic lip-sync.

Key Features

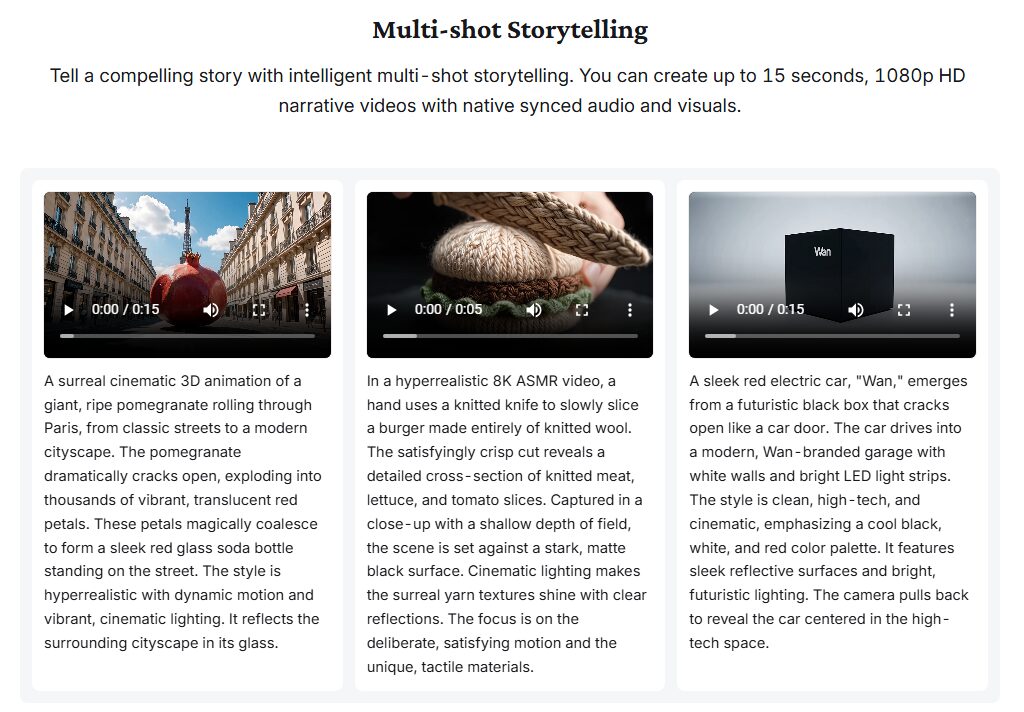

- 15-Second 1080p Generation: Generates up to 15 seconds of 1080p video at 24 frames per second with minimal quality degradation

- Multi-Shot Intelligent Storytelling: Automatically schedules multiple shots within a single video while maintaining consistent characters, settings, and atmosphere

- Reference Video Generation: Preserves visual identity and voice characteristics from 5-second reference videos, supporting single-character and multi-character synchronized performances

- Native Audio-Visual Synchronization: Integrates background soundscapes, dialogue, and sound effects with precise lip-sync matching to visual movement

- Advanced Motion Control: Offers precise control over camera movements, character motion patterns, and dynamic object behavior

- Multimodal Input Support: Accepts text prompts, reference images, audio cues, and reference videos for guided generation

- Open-Source Architecture: Available under Apache 2.0 license with both 1.3B (consumer-grade) and 14B (professional) parameter models

- Voice Consistency: Maintains voice characteristics across generated videos when using speech-containing reference videos

How It Works

Users provide inputs through several modalities: text prompts describing the scene, reference images or videos for visual consistency, and audio specifications for synchronization. Wan 2.6’s diffusion transformer processes these inputs through a custom VAE architecture that preserves temporal coherence. The model automatically detects scene transitions and schedules multi-shot sequences based on the narrative logic embedded in the prompt. When reference content is supplied, the system extracts visual and voice characteristics and keeps them consistent throughout generation. The output is rendered at 1080p with native audio and lip-sync for dialogue sequences.
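The inputs described above can be pictured as a single structured request. The following is a minimal sketch, not the actual Wan 2.6 interface: `Shot`, `GenerationRequest`, and the timestamped prompt layout are hypothetical names and conventions introduced only for illustration. The 15-second and 5-second limits are the ones stated in the feature list.

```python
from dataclasses import dataclass, field
from typing import List, Optional

MAX_DURATION_S = 15    # feature list: up to 15 seconds per video
REFERENCE_CLIP_S = 5   # feature list: 5-second reference videos


@dataclass
class Shot:
    """One shot of a multi-shot prompt (hypothetical structure)."""
    description: str
    duration_s: float


@dataclass
class GenerationRequest:
    """Hypothetical bundle of text, reference, and audio inputs."""
    shots: List[Shot]
    reference_videos: List[str] = field(default_factory=list)
    audio_path: Optional[str] = None

    def total_duration(self) -> float:
        """Sum of all shot durations in seconds."""
        return sum(shot.duration_s for shot in self.shots)

    def validate(self) -> None:
        """Reject requests that exceed the documented 15-second limit."""
        if not self.shots:
            raise ValueError("at least one shot is required")
        if self.total_duration() > MAX_DURATION_S:
            raise ValueError(
                f"total duration {self.total_duration():g}s exceeds "
                f"{MAX_DURATION_S}s"
            )

    def to_prompt(self) -> str:
        """Render shots as one timestamped prompt (assumed layout)."""
        lines = []
        start = 0.0
        for index, shot in enumerate(self.shots, start=1):
            end = start + shot.duration_s
            lines.append(f"Shot {index} [{start:g}-{end:g}s]: {shot.description}")
            start = end
        return "\n".join(lines)


request = GenerationRequest(
    shots=[
        Shot("Wide shot of a harbor at dawn, slow dolly forward", 5),
        Shot("Close-up of the captain speaking to camera", 10),
    ],
    reference_videos=["captain_reference.mp4"],  # placeholder file name
)
request.validate()
print(request.to_prompt())
```

Keeping shot durations explicit makes it easy to check a storyboard against the length limit before any generation time is spent.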

Use Cases

- Filmmaking: Produce cinematic sequences and music videos with professional motion and character consistency

- Advertising: Create branded content with consistent characters and messaging across multiple video formats

- Content Creation: Generate social media content, YouTube videos, and streaming platform material at scale

- Marketing Campaigns: Produce marketing narratives with consistent brand voice and visual identity

- Game Development: Generate in-game cinematics, cutscenes, and cinematic trailers with consistent character performance

- Educational Content: Create instructional videos and animated educational material with consistent narration and visual style

Pros & Cons

Advantages

- High Resolution: 1080p generation provides professional-quality output suitable for publication and broadcast

- Realistic Motion: Advanced motion synthesis produces fluid camera movements and character animation without jittering

- Open Source: Apache 2.0 licensed code and model weights permit commercial use and customization, subject to the license’s attribution and notice terms

- Character Consistency: Reference video input reliably maintains visual and voice identity across scenes

- Multi-Platform: Available through cloud services and local deployment options for diverse user needs

- Audio Integration: Native audio-visual sync eliminates separate audio editing workflows

Disadvantages

- High Compute Requirements: The 14B model demands significant GPU resources; the 1.3B version requires at least 8.19 GB of VRAM

- Limited Customization (Free Tier): Cloud versions may restrict advanced parameter tuning compared to self-hosted deployments

- Learning Curve: Multi-shot prompt engineering requires understanding of cinematic terminology and narrative structure

- Reference Quality Dependency: Output quality depends heavily on reference video/image quality and clarity

- Storage Requirements: 15-second 1080p videos generate large file sizes requiring substantial storage and bandwidth
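The compute and storage points above can be put into rough numbers. This is a back-of-the-envelope sketch under stated assumptions: the 10 Mbps encoded bitrate and the 2 bytes per parameter (16-bit weights) are illustrative choices, not figures from Wan 2.6. The weight estimate covers parameters only, so real VRAM use is higher once activations and auxiliary components are loaded.

```python
def frame_count(duration_s: float, fps: int) -> int:
    """Number of frames in a clip."""
    return round(duration_s * fps)


def raw_video_bytes(width: int, height: int, duration_s: float, fps: int,
                    bytes_per_pixel: int = 3) -> int:
    """Uncompressed size, assuming 8-bit RGB (3 bytes per pixel)."""
    return width * height * bytes_per_pixel * frame_count(duration_s, fps)


def encoded_video_bytes(duration_s: float, bitrate_mbps: float) -> float:
    """Compressed size at a constant bitrate (bitrate is an assumption)."""
    return duration_s * bitrate_mbps * 1_000_000 / 8


def weight_bytes(params_billion: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights alone, assuming 16-bit parameters."""
    return params_billion * 1e9 * bytes_per_param


if __name__ == "__main__":
    frames = frame_count(15, 24)                # 360 frames
    raw = raw_video_bytes(1920, 1080, 15, 24)   # 2,239,488,000 bytes
    encoded = encoded_video_bytes(15, 10)       # assumed 10 Mbps
    print(f"frames: {frames}")
    print(f"raw: {raw / 1e9:.2f} GB, encoded: {encoded / 1e6:.2f} MB")
    for size in (1.3, 14):
        print(f"{size}B weights: {weight_bytes(size) / 1e9:.1f} GB")
```

Under these assumptions, a single encoded clip is on the order of tens of megabytes while uncompressed frames run to gigabytes, so storage pressure comes mainly from intermediate or lossless outputs and from generating at scale.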

How Does It Compare?

Sora 2

- Key Features: Text-to-video generation, environmental consistency focus, synchronized dialogue and soundscapes, advanced world state persistence

- Strengths: Superior environmental consistency, immersive background soundscapes, seamless physics simulation, professional film-grade quality, advanced spatial coherence

- Limitations: Proprietary and expensive (estimated \$20/month+), limited API access, no voice cloning from reference videos, less character-focused

- Differentiation: Sora 2 emphasizes environmental immersion and world persistence; Wan 2.6 specializes in character consistency and voice cloning

Runway Gen-3

- Key Features: High-fidelity video generation, temporal coherence optimization, multimodal input support, advanced character and scene control

- Strengths: Excellent temporal consistency, strong motion quality, professional adoption, web-based interface, comprehensive API

- Limitations: Paid service (\$10-28/month), less emphasis on voice cloning, limited open-source ecosystem, requires subscription for advanced features

- Differentiation: Runway Gen-3 is a commercial service with professional features; Wan 2.6 is open-source with emphasis on character voice consistency

Kling 2.6

- Key Features: Fast video generation, extended length support, AI voice generation, character consistency, style transfer capabilities

- Strengths: Fast generation speed, native AI voice generation integrated, good character consistency, extended video length (up to 10 minutes)

- Limitations: Limited reference video control, voice cloning capabilities lag behind Wan 2.6, proprietary model (not open-source), primarily Chinese platform

- Differentiation: Kling 2.6 focuses on speed and extended length; Wan 2.6 emphasizes reference video fidelity and voice preservation

Open-Sora

- Key Features: Open-source video generation, comprehensive task support (text-to-image, text-to-video, image-to-video), modular architecture

- Strengths: Fully open-source, broader task coverage, modular design enabling customization, freely available model weights

- Limitations: Earlier stage of development, less mature than Wan 2.6, fewer advanced features such as voice cloning, smaller community adoption

- Differentiation: Open-Sora is a foundational framework; Wan 2.6 is more production-ready with advanced features

Pika 2

- Key Features: Real-time video generation preview, extended video creation, character controller, physics-aware generation

- Strengths: Real-time feedback during generation, excellent for prototyping, good physics simulation, creative tools integration

- Limitations: Proprietary and subscription-based, less emphasis on reference consistency, primarily web-based, smaller model ecosystem

- Differentiation: Pika 2 emphasizes real-time creative workflow; Wan 2.6 prioritizes reference fidelity and voice consistency

Final Thoughts

Wan 2.6 represents a significant advancement in open-source video generation, particularly for creators who prioritize character consistency, voice preservation, and narrative coherence. The model’s multi-shot storytelling capabilities and reference video support enable sophisticated cinematic workflows that previously required manual editing or expensive proprietary services.

Open-source availability and two model sizes (1.3B for accessibility, 14B for quality) lower the barrier to entry. Unlike proprietary competitors, Wan 2.6 leaves creators in full control of model training, deployment, and customization. The Apache 2.0 license permits commercial use without per-video fees or subscription requirements, subject to its attribution and notice terms.

However, the high compute requirements for optimal performance and the learning curve of effective multi-shot prompt engineering mean the model best serves technically proficient creators. Proprietary competitors such as Sora 2 and Runway Gen-3 offer stronger environmental consistency and immersion, so the right tool depends on creative priorities.

For independent creators, content studios, and organizations requiring affordable, customizable video generation with strong character and voice consistency, Wan 2.6 offers compelling value. For filmmakers prioritizing environmental realism and immersive world-building, proprietary competitors may better suit specific needs.

As the open-source video generation landscape matures, Wan 2.6’s emphasis on transparency, accessibility, and community contribution positions it as a foundational tool for democratizing professional-grade video creation.