Overview

Instella is a suite of fully open-source 3-billion-parameter language models developed by AMD and trained on MI300X GPUs. It is aimed at researchers, academics, and open-source enthusiasts who want a transparent, accessible model to study and build on. Let’s look at what makes Instella a noteworthy contender in the open-source AI arena.

Key Features

Instella boasts a compelling set of features that make it a valuable tool for various AI endeavors. Here’s a breakdown:

- 3B parameter language models: Offers a balance between size and performance, suitable for a range of NLP tasks.

- Fully open-source: Weights and code are released under RAIL and MIT licenses respectively, fostering collaboration and innovation.

- Trained on MI300X GPUs: Optimized for AMD’s high-performance hardware, ensuring efficient training and inference.

- 36 decoder layers: Provides a deep architecture for capturing complex language patterns.

- 32 attention heads: Lets each layer attend to different parts of the input sequence in parallel.

- Supports up to 4,096 token sequence length: Allows for processing longer pieces of text, enhancing contextual understanding.

- FlashAttention-2: Speeds up attention computation and reduces its memory traffic.

- torch.compile: Uses PyTorch’s compiler to optimize the model’s computation graph for faster execution.

- Fully Sharded Data Parallelism (FSDP): Shards model parameters, gradients, and optimizer state across GPUs so training scales efficiently to multiple devices.
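To make the architecture numbers above concrete, here is a minimal Python sketch that derives the per-head dimension and a rough key/value cache size from them. The layer count, head count, and sequence length come from the list; the hidden size of 2560, the use of standard multi-head attention, and 2-byte values are assumptions made for illustration and are not stated in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecoderConfig:
    # Values taken from the feature list.
    num_layers: int = 36
    num_heads: int = 32
    max_seq_len: int = 4096
    # ASSUMPTION: the article does not give the hidden size; 2560 is illustrative.
    hidden_size: int = 2560

    @property
    def head_dim(self) -> int:
        """Width of each attention head."""
        if self.hidden_size % self.num_heads != 0:
            raise ValueError("hidden_size must be divisible by num_heads")
        return self.hidden_size // self.num_heads

    def kv_cache_bytes(self, seq_len: int, bytes_per_value: int = 2) -> int:
        """Bytes needed to cache keys and values for one sequence.

        ASSUMPTION: one key and one value vector per head per layer
        (standard multi-head attention), stored in a 2-byte format.
        """
        if not 0 <= seq_len <= self.max_seq_len:
            raise ValueError("seq_len must be between 0 and max_seq_len")
        per_token = 2 * self.num_layers * self.num_heads * self.head_dim * bytes_per_value
        return per_token * seq_len


cfg = DecoderConfig()
print("head_dim:", cfg.head_dim)
print("KV cache at full context (GiB):", cfg.kv_cache_bytes(cfg.max_seq_len) / 2**30)
```

Under these assumptions, a full 4,096-token context needs on the order of a gigabyte of cache per sequence, which is one reason sequence length and memory optimizations go hand in hand.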

How It Works

Instella models use a decoder-only architecture with 36 layers to generate text. The process begins with the OLMo tokenizer, which converts text into token IDs the model can process. During training, a hybrid sharding strategy distributes model state and computation across AMD GPUs, which spreads the load and shortens training time. The models also rely on memory optimization techniques to keep training and inference efficient.
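The idea behind hybrid sharding can be sketched in a few lines of Python. In this scheme, model state is sharded across the GPUs within a group (often one node) and replicated across groups. The function name, the group sizes, and the rank layout below are hypothetical; the article does not describe Instella's actual configuration, so treat this as an illustration of the general technique only.

```python
def hybrid_shard_groups(world_size: int, shard_group_size: int):
    """Return (shard_groups, replica_groups) as lists of GPU ranks.

    Ranks in the same shard group each hold a different slice of the
    model state. Ranks in the same replica group hold the same slice
    in different shard groups and keep it in sync with each other.
    """
    if world_size <= 0 or shard_group_size <= 0:
        raise ValueError("sizes must be positive")
    if world_size % shard_group_size != 0:
        raise ValueError("world_size must be a multiple of shard_group_size")

    num_groups = world_size // shard_group_size
    shard_groups = [
        list(range(g * shard_group_size, (g + 1) * shard_group_size))
        for g in range(num_groups)
    ]
    replica_groups = [
        [g * shard_group_size + i for g in range(num_groups)]
        for i in range(shard_group_size)
    ]
    return shard_groups, replica_groups


# Hypothetical layout: 2 nodes with 8 GPUs each.
shard_groups, replica_groups = hybrid_shard_groups(world_size=16, shard_group_size=8)
print("shard groups:  ", shard_groups)
print("replica groups:", replica_groups)
```

The design trade-off is that the heavier shard-gathering traffic stays inside a group, where interconnect bandwidth is usually highest, while only gradient synchronization crosses group boundaries. PyTorch's FSDP exposes a hybrid sharding option along these lines.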

Use Cases

Instella’s open-source nature and performance capabilities make it suitable for a variety of applications. Here are some key use cases:

- AI research: Provides a transparent and accessible platform for exploring novel AI techniques.

- Academic model development: Enables students and researchers to build upon and customize a powerful language model.

- Natural language understanding: Facilitates the development of applications that can understand and interpret human language.

- Language model benchmarking: Offers an open, reproducible baseline for comparing the performance of different models and architectures.

- Open-source model innovation: Encourages community contributions and the creation of new and improved language models.

Pros & Cons

Like any tool, Instella has its strengths and weaknesses. Let’s weigh the advantages and disadvantages.

Advantages

- Fully open-source, promoting transparency and collaboration.

- High performance on AMD GPUs, ensuring efficient operation.

- Transparent training process, fostering trust and understanding.

- Strong academic focus, encouraging research and education.

Disadvantages

- Restricted commercial use under RAIL, limiting certain business applications.

- The 3B parameter count can limit performance on more complex tasks compared with larger models.

- Dependency on AMD ecosystem for optimal performance, requiring specific hardware.

How Does It Compare?

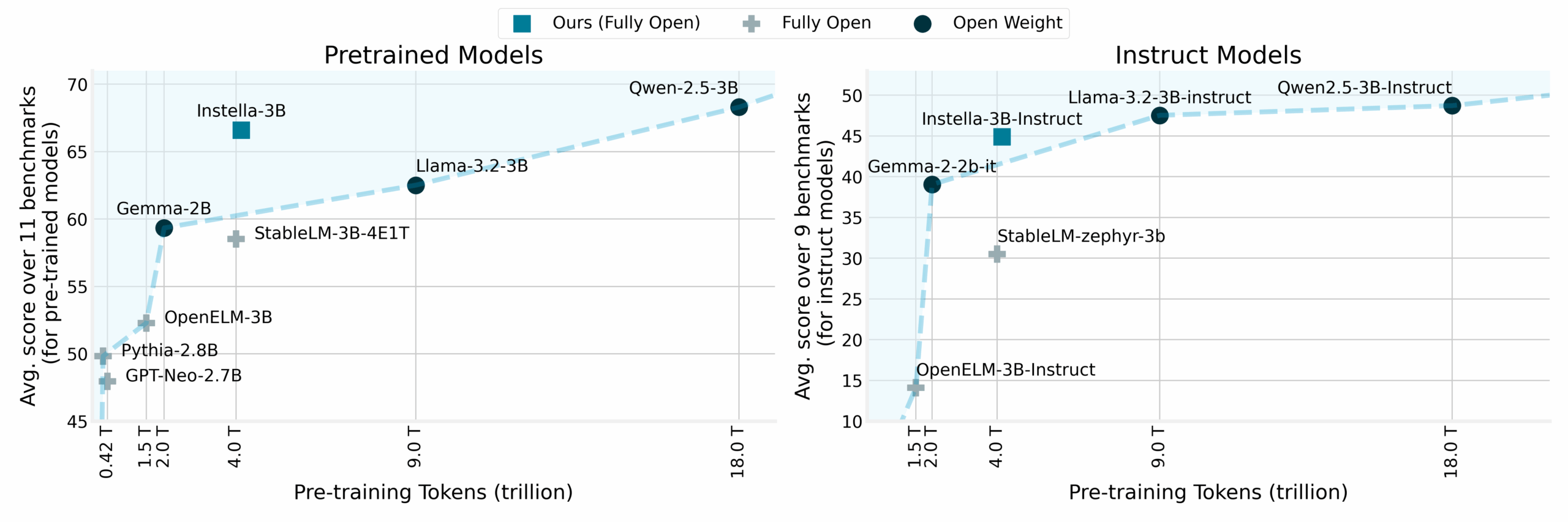

When considering open-source language models, Instella finds itself in a competitive landscape. Here’s how it stacks up against some notable alternatives:

- Llama-3.2-3B: Similar size, but different training stack. Instella’s advantage lies in its optimization for AMD GPUs.

- Gemma-2-2B: Smaller, less compute-intensive. Instella offers a larger model size for potentially better performance on certain tasks.

- Qwen-2.5-3B: Comparable in model scale and openness. The choice between Instella and Qwen-2.5-3B may depend on specific hardware preferences and licensing considerations.

Final Thoughts

Instella represents a significant step forward in the open-source AI movement. Its commitment to transparency, coupled with its optimization for AMD hardware, makes it a compelling option for researchers, academics, and anyone seeking to explore the potential of language models. While its commercial use is restricted and its performance is tied to the AMD ecosystem, Instella’s contributions to the open-source community are undeniable. It’s a tool to watch as the AI landscape continues to evolve.