Overview

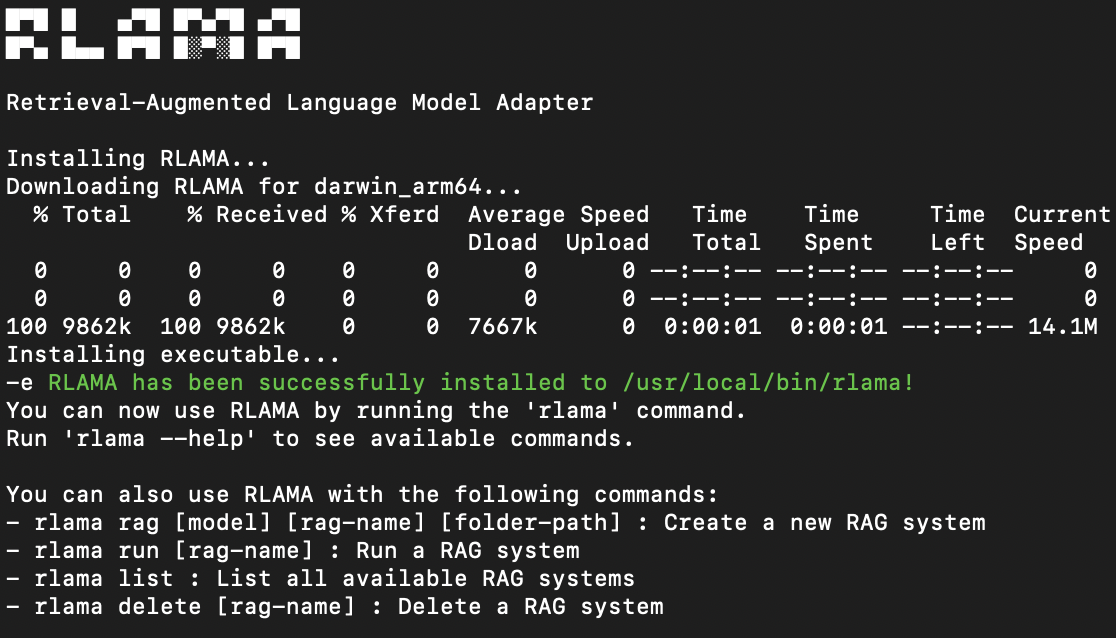

RLAMA (Retrieval-Augmented Local Assistant Model Agent) is an open-source tool for deploying AI assistants directly on your own infrastructure. Instead of sending sensitive data to external servers, RLAMA runs large language models locally, so your data stays under your control. Let’s dive into what makes RLAMA a compelling option for building your own AI-powered applications.

Key Features

RLAMA boasts a range of features designed for seamless local AI deployment:

- Local Deployment: Run your AI assistant entirely on your own hardware, ensuring data privacy and security.

- Retrieval-Augmented Generation (RAG): Leverages RAG pipelines to provide context-aware and accurate responses based on your local documents.

- Open-Source: Benefit from a transparent and customizable platform, fostering community contributions and continuous improvement.

- Compatible with Leading Technologies: Seamlessly integrates with popular large language models like Mistral and LLaMA, as well as vector databases like Chroma and Weaviate.

- Supports Streaming Responses: Experience real-time interactions with your AI assistant, providing a more natural and engaging user experience.

- Lightweight and Containerized Setup: Simplifies deployment and management with a containerized environment, ensuring consistency across different systems.

How It Works

RLAMA operates by creating a self-contained environment where a language model works in tandem with a vector database. When a user submits a query, RLAMA runs a similarity search over the vector database to retrieve the most relevant documents. Those documents are then passed to the language model as context, so the response is grounded in your own data. Because the entire process runs locally, no query or document has to leave your machine. This also avoids network round trips to a remote API, although overall response time still depends on your local hardware.
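The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration, not RLAMA’s actual code or API: the function names (`embed`, `cosine`, `retrieve`, `build_prompt`) and the sample documents are hypothetical, the "embedding" is a toy bag-of-words count standing in for a real embedding model, and an in-memory list stands in for the vector database. The final step, sending the prompt to a local language model, is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Combine retrieved documents and the question into one prompt for the model."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Hypothetical local documents, used only for illustration.
DOCS = [
    "Vacation requests must be submitted two weeks in advance.",
    "The VPN client is installed from the internal software portal.",
    "Expense reports are due on the last day of each month.",
]

if __name__ == "__main__":
    question = "How do I install the VPN client?"
    print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

In a real deployment, the toy `embed` function would be replaced by an embedding model and the list scan by a vector database query, but the shape of the pipeline stays the same: embed the query, rank documents by similarity, and hand the top matches to the model as context.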

Use Cases

RLAMA’s local and secure nature makes it suitable for a variety of applications:

- Internal Enterprise Assistants: Create AI assistants that can answer employee questions, retrieve internal documents, and streamline workflows, all while keeping sensitive company data secure.

- Research Paper Summarization: Quickly summarize research papers and extract key findings, accelerating the research process.

- Legal and Compliance Query Handling: Answer legal and compliance questions from your own local documents, helping keep responses consistent.

- Personalized AI Tutors: Develop personalized learning experiences that adapt to individual student needs, providing tailored support and guidance.

- Offline AI Support Systems: Deploy AI assistants in environments with limited or no internet connectivity, such as remote locations or secure facilities.

Pros & Cons

Like any tool, RLAMA has its strengths and weaknesses. Let’s take a look:

Advantages

- Complete Local Control: Maintain full control over your data and AI models, ensuring privacy and security.

- Enhanced Data Privacy: Protect sensitive information by keeping all data processing within your local infrastructure.

- No Internet Required: Operate your AI assistant in offline environments, making it ideal for secure or remote locations.

- Open-Source and Extensible: Customize and extend RLAMA to meet your specific needs, leveraging the power of the open-source community.

Disadvantages

- Requires Significant Local Resources: Running large language models locally can demand substantial computing power and storage.

- Setup Can Be Complex for Non-Technical Users: Initial setup and configuration may require technical expertise, potentially posing a barrier to entry for some users.

- Limited Scalability Without Infrastructure: Scaling RLAMA to handle a large number of users or complex queries may require significant infrastructure investments.
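To get a rough sense of the resource demand mentioned above, the memory needed just to hold a model’s weights can be estimated as parameter count times bytes per weight. The sketch below is a back-of-the-envelope calculation only: the 7-billion-parameter size and the precision levels are illustrative assumptions, the helper name `weight_memory_gib` is hypothetical, and the estimate ignores everything beyond the weights (inference-time buffers, the vector index, the operating system).

```python
def weight_memory_gib(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in GiB."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


if __name__ == "__main__":
    # Illustrative assumption: a 7-billion-parameter model at common precisions.
    for bits in (16, 8, 4):
        print(f"7B model at {bits}-bit: ~{weight_memory_gib(7, bits):.1f} GiB")
```

Actual requirements will be higher than these figures, so treat them as a lower bound when sizing hardware.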

How Does It Compare?

When considering local AI solutions, it’s important to understand how RLAMA stacks up against the competition:

- PrivateGPT: While PrivateGPT also focuses on local AI, RLAMA offers a more modular and containerized approach, simplifying deployment and management.

- LocalAI: LocalAI provides a broader range of AI capabilities, but RLAMA is specifically designed for retrieval-augmented generation (RAG) pipelines, making it a better choice for applications requiring context-aware responses.

- GPT4All: GPT4All provides a simplified way to run language models locally, but RLAMA integrates vector database support out of the box, which makes it easier to build retrieval-augmented generation on top of your own documents.

Final Thoughts

RLAMA offers a compelling solution for organizations and individuals seeking to deploy AI assistants locally, prioritizing data privacy and control. While the setup process may require some technical expertise and local resources, the benefits of enhanced security, offline functionality, and open-source flexibility make RLAMA a powerful tool for a wide range of applications. If you’re looking for a robust and customizable platform for building your own private AI assistant, RLAMA is definitely worth exploring.